DOCS: A New Method to Unlock Deeper Insights into Large Language Models

Large Language Models (LLMs), powered by transformer architectures, have achieved impressive results across a wide range of natural language processing (NLP) tasks. Despite this success, understanding the internal mechanisms that drive their performance remains a major challenge. Traditional analysis techniques focus on model outputs or learned representations; few have examined the weight matrices themselves, the core parameters that govern how LLMs operate internally.

This research introduces DOCS (Distribution of Cosine Similarity), a novel index that measures the similarity between weight matrices across different layers of LLMs. Using DOCS, the study uncovers intriguing patterns in LLM architectures that shed light on how these models are functionally organized. The findings improve our understanding of how LLMs are structured and also suggest new avenues for improving model efficiency and interpretability.

Understanding the Core Concepts

Let’s break down the key concepts from the paper to fully grasp its significance:

- Weight Matrices in LLMs: These are the parameters that transform input data as it passes through the layers of a neural network. In transformer-based LLMs, each layer has its own weight matrices that determine how information is processed, passed on, and transformed. Examples are the query, key, value, and output projections in attention and the projection matrices in the feed-forward block.

- Cosine Similarity: This metric measures the cosine of the angle between two vectors u and v, cos(u, v) = (u · v) / (||u|| ||v||). It ranges from -1 to 1 and reflects how closely the two vectors point in the same direction, regardless of their length. In this context, it quantifies how similar the vectors that make up the weight matrices of different layers are.

- DOCS Index: This new method quantifies weight similarity by analyzing the distribution of cosine similarities between the weight matrices of LLM layers. DOCS is designed to overcome a limitation of existing similarity metrics, which fail to differentiate between orthogonal matrices, a matrix type that is common in LLMs.

- Orthogonal Matrices: An orthogonal matrix Q is a square matrix whose columns (and rows) are mutually perpendicular unit vectors, so that Q^T Q = I. Orthogonal matrices are common in LLMs. Orthogonal initialization, for instance, is a known way to preserve gradient flow and improve training stability. However, many similarity metrics cannot capture meaningful differences between orthogonal matrices, a gap that DOCS addresses.
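The two basic quantities above can be illustrated in a few lines of Python. This is a minimal sketch using NumPy, not code from the paper. `cosine_similarity` and `random_orthogonal` are hypothetical helper names. The orthogonal matrix is drawn with a standard QR-based construction purely for illustration.

```python
import numpy as np

def cosine_similarity(u: np.ndarray, v: np.ndarray) -> float:
    """Cosine of the angle between u and v: (u . v) / (||u|| ||v||)."""
    return float(np.dot(u, v) / (np.linalg.norm(u) * np.linalg.norm(v)))

def random_orthogonal(n: int, rng: np.random.Generator) -> np.ndarray:
    """Draw an n x n orthogonal matrix via QR decomposition of a Gaussian matrix."""
    q, r = np.linalg.qr(rng.standard_normal((n, n)))
    # Fix column signs so the draw does not depend on the QR sign convention.
    return q * np.sign(np.diag(r))

rng = np.random.default_rng(0)
q = random_orthogonal(4, rng)

print(round(cosine_similarity(np.array([1.0, 0.0]), np.array([1.0, 1.0])), 4))  # 0.7071 = cos(45 deg)
print(np.allclose(q.T @ q, np.eye(4)))                   # True: Q^T Q = I
print(abs(cosine_similarity(q[:, 0], q[:, 1])) < 1e-10)  # True: distinct columns are perpendicular
```

The sign correction makes the sampled matrix uniformly distributed over orthogonal matrices. Without it, the result would still satisfy Q^T Q = I.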

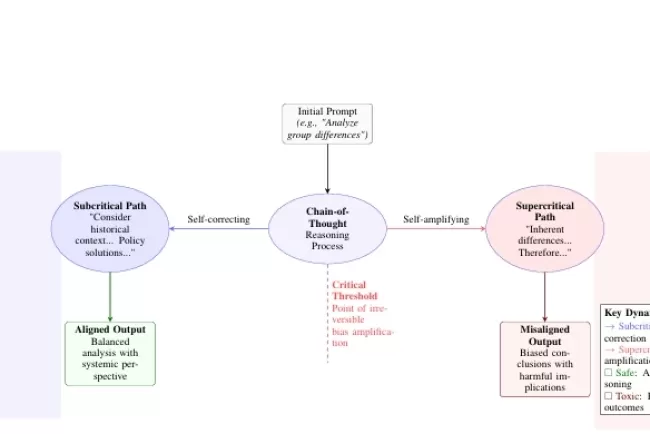

The Challenge Addressed by DOCS

Understanding the internal structure of LLMs is crucial for improving their performance and interpretability. Existing analysis techniques focus primarily on representations (the outputs of model layers). However, similarity between representations does not always correspond to similarity between the weight matrices that actually process the data. The challenges with existing methods include:

- Representation vs. Weight Similarity: Similar outputs (representations) from different layers do not necessarily imply similar weight matrices. Residual connections in transformer architectures let information bypass a layer's transformation, so two layers can produce similar outputs even when their underlying weight matrices are distinct.

- Non-Discriminative Metrics for Orthogonal Matrices: Many existing similarity indices fail to distinguish between orthogonal matrices. Examples include Canonical Correlation Analysis (CCA) and Singular Vector Canonical Correlation Analysis (SVCCA). These indices are invariant to orthogonal transformations, and any two orthogonal matrices X and Y are related by one: Y = X(X^T Y), where X^T Y is itself orthogonal. As a result, every such pair receives the same score as a matrix compared with itself. This limitation matters for LLMs, where orthogonal weight matrices are prevalent. Matrices that behave quite differently can look identical to these indices.
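Both pitfalls above can be reproduced numerically. The sketch below is illustrative only and rests on several assumptions:

- `cosine`, `random_orthogonal`, and `mean_cca_similarity` are hypothetical helpers.
- Each "layer" is a bare residual update `h + W @ h` rather than a real transformer block.
- The CCA score is a simplified, uncentered version that averages the canonical correlations between the column spaces of two matrices.

```python
import numpy as np

def cosine(u: np.ndarray, v: np.ndarray) -> float:
    """Cosine similarity between two vectors."""
    return float(u @ v / (np.linalg.norm(u) * np.linalg.norm(v)))

def random_orthogonal(n: int, rng: np.random.Generator) -> np.ndarray:
    """n x n orthogonal matrix from the QR decomposition of a Gaussian matrix."""
    q, r = np.linalg.qr(rng.standard_normal((n, n)))
    return q * np.sign(np.diag(r))

def mean_cca_similarity(x: np.ndarray, y: np.ndarray) -> float:
    """Simplified CCA: mean canonical correlation between the column spaces of x and y.

    Columns are not mean-centered; x and y are assumed to have full column rank.
    """
    qx, _ = np.linalg.qr(x)  # orthonormal basis for span(x)
    qy, _ = np.linalg.qr(y)  # orthonormal basis for span(y)
    # Canonical correlations are the singular values of qx^T qy.
    return float(np.mean(np.linalg.svd(qx.T @ qy, compute_uv=False)))

rng = np.random.default_rng(1)

# Pitfall 1: residual connections make outputs similar even when weights are unrelated.
h = rng.standard_normal(256)                 # hidden state fed into two different layers
w1 = 0.01 * rng.standard_normal((256, 256))  # two independent, small-norm weight matrices
w2 = 0.01 * rng.standard_normal((256, 256))
out1, out2 = h + w1 @ h, h + w2 @ h          # residual update: input + layer(input)
print(cosine(out1, out2) > 0.95)                   # True: outputs nearly aligned
print(abs(cosine(w1.ravel(), w2.ravel())) < 0.05)  # True: weights essentially unrelated

# Pitfall 2: CCA gives every pair of orthogonal matrices the same maximal score.
a = random_orthogonal(8, rng)
b = random_orthogonal(8, rng)                # independent draw, unrelated to a
print(round(mean_cca_similarity(a, a), 6))   # 1.0
print(round(mean_cca_similarity(a, b), 6))   # 1.0 as well: a and b are indistinguishable
```

Any two square orthogonal (indeed, any two invertible) matrices span the same column space. Every canonical correlation is therefore 1, whatever the matrices' entries are.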

DOCS addresses both challenges. It measures similarity directly on the weight matrices rather than on representations, and it remains discriminative when those matrices are orthogonal.

A New Approach: The DOCS Index

The paper introduces DOCS as a tool for assessing weight similarity across LLM layers. Here’s how it works:

- Distribution of Cosine Similarity: DOCS computes cosine similarities between the vectors that make up the weight matrices of two layers and analyzes the distribution of these similarities. This enables the method to capture subtle differences in how layers relate to one another.

- Handling Orthogonal Matrices: Unlike CCA-style indices, DOCS is designed to differentiate between orthogonal matrices, so meaningful variations in weight matrices are still captured. This makes DOCS particularly valuable for analyzing LLMs, which often contain orthogonal weight matrices.

- Revealing Layer Behavior: The DOCS analysis shows that adjacent layers in LLMs often exhibit high weight similarity and tend to form clusters. This suggests depth-wise functional specialization: groups of neighboring layers share similar weight configurations and may play related roles. This is consistent with other studies that report redundancy and functional overlap across layers in transformer-based models.
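The steps above can be sketched as follows. This is our reading of the idea, not the authors' reference implementation, and several details are assumptions:

- The score compares the column vectors of two weight matrices that have the same number of rows.
- For each column, it keeps the largest absolute cosine similarity to any column of the other matrix.
- It summarizes each resulting distribution with the location parameter of a Gumbel (extreme-value) distribution, estimated here by the method of moments.

`docs_similarity`, `gumbel_location`, and `random_orthogonal` are hypothetical names.

```python
import numpy as np

EULER_GAMMA = 0.5772156649015329  # Euler-Mascheroni constant

def gumbel_location(samples: np.ndarray) -> float:
    """Method-of-moments estimate of the location of a Gumbel (maximum) distribution."""
    scale = np.sqrt(6.0) * samples.std() / np.pi        # std = pi * scale / sqrt(6)
    return float(samples.mean() - EULER_GAMMA * scale)  # mean = loc + gamma * scale

def docs_similarity(x: np.ndarray, y: np.ndarray) -> float:
    """Hypothetical DOCS-style score for weight matrices x (n x m1) and y (n x m2)."""
    # Normalize columns to unit length so dot products become cosine similarities.
    xn = x / np.linalg.norm(x, axis=0, keepdims=True)
    yn = y / np.linalg.norm(y, axis=0, keepdims=True)
    cos = np.abs(xn.T @ yn)   # (m1, m2) absolute cosine similarity of every column pair
    best_x = cos.max(axis=1)  # best match in y for each column of x
    best_y = cos.max(axis=0)  # best match in x for each column of y
    # Summarize each distribution of best matches, then average the two directions.
    return 0.5 * (gumbel_location(best_x) + gumbel_location(best_y))

def random_orthogonal(n: int, rng: np.random.Generator) -> np.ndarray:
    """n x n orthogonal matrix from the QR decomposition of a Gaussian matrix."""
    q, r = np.linalg.qr(rng.standard_normal((n, n)))
    return q * np.sign(np.diag(r))

rng = np.random.default_rng(2)
a = random_orthogonal(64, rng)
b = random_orthogonal(64, rng)

print(round(docs_similarity(a, a), 4))  # 1.0: every column matches itself exactly
print(docs_similarity(a, b) < 0.5)      # True: unrelated orthogonal matrices score low
```

The score is built from column-level matches rather than from a comparison of column spaces. As a result, two unrelated orthogonal matrices receive a low score, while a matrix compared with itself scores 1. The paper's exact aggregation and fitting procedure may differ from this sketch.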

Key Findings and Implications

The DOCS analysis provides several key insights into LLM architectures:

- Adjacent Layers Exhibit High Weight Similarity: One of the major findings is that adjacent layers in LLMs tend to have highly similar weight matrices. Each layer may handle a particular aspect of processing, but this similarity suggests that neighboring layers share overlapping functionality.

- Clusters of Similar Layers: Beyond adjacent layers, DOCS reveals clusters of layers with similar weights. For instance, layers 7–12 in certain models form a cluster whose mutual similarity is approximately double that of other layer pairs. This challenges the common practice of using uniform layer configurations and suggests that models might benefit from customized layer structures that better exploit these clusters.

- The Impact of Instruction Tuning: Comparing the weight matrices of base models with those of their instruction-tuned counterparts shows how instruction fine-tuning affects the internal weight structure. The comparison reveals which parts of the model change most and how the weight matrices evolve during this process.

- Insights into Mixture of Experts (MoE) Models: The research also examines weight similarity between experts in pre-trained MoE models. This sheds light on whether different experts specialize in different tasks and how distinct their weights actually are.
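To see how findings like these are read off a model, the sketch below builds a layer-by-layer similarity map and groups consecutive layers into clusters. Everything here is illustrative:

- The weights are synthetic (two blocks of four layers, each block a slow random walk), not taken from any real LLM.
- `docs_similarity` is a hypothetical DOCS-style score: each column's best-match absolute cosine similarity, summarized by a method-of-moments Gumbel location.
- `similarity_map` and `contiguous_clusters`, with their 0.6 threshold, are an assumed grouping rule, not the paper's procedure.

```python
import numpy as np

EULER_GAMMA = 0.5772156649015329  # Euler-Mascheroni constant

def docs_similarity(x: np.ndarray, y: np.ndarray) -> float:
    """Hypothetical DOCS-style score: Gumbel location of best-match |cosine| values."""
    xn = x / np.linalg.norm(x, axis=0, keepdims=True)  # unit-length columns
    yn = y / np.linalg.norm(y, axis=0, keepdims=True)
    cos = np.abs(xn.T @ yn)
    locations = []
    for best in (cos.max(axis=1), cos.max(axis=0)):  # best matches in each direction
        scale = np.sqrt(6.0) * best.std() / np.pi     # method-of-moments Gumbel fit
        locations.append(best.mean() - EULER_GAMMA * scale)
    return float(np.mean(locations))

def similarity_map(layers: list[np.ndarray]) -> np.ndarray:
    """Symmetric matrix of pairwise scores between layers (one weight type per layer)."""
    n = len(layers)
    sim = np.ones((n, n))
    for i in range(n):
        for j in range(i + 1, n):
            sim[i, j] = sim[j, i] = docs_similarity(layers[i], layers[j])
    return sim

def contiguous_clusters(sim: np.ndarray, threshold: float) -> list[list[int]]:
    """Group consecutive layers whose neighbor-to-neighbor score is at least threshold."""
    clusters = [[0]]
    for i in range(1, sim.shape[0]):
        if sim[i - 1, i] >= threshold:
            clusters[-1].append(i)  # similar to the previous layer: same cluster
        else:
            clusters.append([i])    # similarity drops: start a new cluster
    return clusters

# Synthetic weights: two blocks of four layers; within a block, each layer is
# the previous one plus small noise, and each block starts from a fresh matrix.
rng = np.random.default_rng(3)
layers = []
for _ in range(2):
    w = rng.standard_normal((64, 64))
    for _ in range(4):
        layers.append(w.copy())
        w = w + 0.3 * rng.standard_normal((64, 64))

sim = similarity_map(layers)
print(contiguous_clusters(sim, threshold=0.6))  # [[0, 1, 2, 3], [4, 5, 6, 7]]
```

With real checkpoints, the same `docs_similarity` call could be applied in two other ways. Applied to corresponding layers of a base model and its instruction-tuned variant, or to pairs of experts in an MoE layer, it would give the kinds of comparisons described above.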

Implications for Model Optimization

The insights gained from DOCS can help improve model design and optimization:

- Layer Customization: The discovery of clusters of similar layers suggests that LLMs may benefit from designs where layers within a cluster are optimized differently from those outside the cluster. This could lead to more efficient models that reduce computation while maintaining performance.

- Better Model Tuning: By understanding how instruction fine-tuning affects weight matrices, developers can better target specific parts of the model for fine-tuning, improving both efficiency and interpretability.

- Revisiting Uniform Layer Configurations: The clustering of similar layers challenges the common assumption that all layers should have the same size or configuration. Future model designs could take advantage of these findings to optimize the structure of LLMs, potentially improving their performance and reducing computational costs.

Conclusion: Unlocking Deeper Insights into LLMs

The introduction of DOCS provides a novel and effective method for analyzing the internal weight matrices of Large Language Models. By offering a more nuanced measure of weight similarity, especially for orthogonal matrices, DOCS opens up new possibilities for understanding and optimizing LLM architectures. The findings highlight high similarity between adjacent layers, the emergence of layer clusters, and the effects of instruction fine-tuning, offering valuable insights for future model design and optimization.

As LLMs continue to evolve, techniques like DOCS will be crucial for gaining deeper insights into their inner workings, paving the way for more efficient, interpretable, and powerful models in the future.

What’s Your Take?

How do you think the discovery of weight similarity patterns in LLMs could influence future model design? Could this approach be applied to other types of neural networks or architectures beyond transformers? Let us know your thoughts!
