Building 3D Abstractions with Transformers: Inverting Procedural Models for Visual Quality Inspection

In the evolving fields of 3D graphics and architectural modeling, there is growing interest in abstractions: simplified, high-level representations that capture the essential geometry and structure of buildings. Abstractions are useful not only to architects and designers but also in urban planning, gaming, and the training of AI systems. Creating them from raw sensor data such as point clouds (sets of 3D points sampled from the surfaces of a physical environment) is no small task, however. Progress has traditionally been held back by a shortage of efficient methods and of large labeled datasets.

But what if a machine learning model could infer these abstractions automatically from point cloud data? That is the question tackled in the innovative research paper "Synthesizing 3D Abstractions by Inverting Procedural Buildings with Transformers" by Maximilian Dax, Jordi Berbel, Jan Stria, Leonidas Guibas, and Urs Bergmann.

The paper presents a transformer-based framework that learns to invert procedural building models, the rule-based generators commonly used in the gaming and animation industries. Given a point cloud, the model generates a programmatic description of the building's abstraction, enabling new approaches to urban modeling, asset generation, and synthetic training data for robotics and simulation.

What Are 3D Abstractions, and Why Are They Important?

A 3D abstraction of a building does not try to capture every intricate detail of its structure. Instead, it focuses on the key elements that convey its essential geometry: its height, footprint, storeys, and facade types. Such abstractions are efficient to process, compare, and visualize, which makes them ideal for applications like:

- Urban planning: Quickly generating 3D city models for simulations or analysis.

- Synthetic data generation: Creating virtual environments for training AI agents.

- Asset management: Identifying and tracking the condition of buildings using simple yet informative 3D representations.
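A handful of fields is enough to express such an abstraction. The sketch below uses Python dataclasses with hypothetical names (`Storey`, `BuildingAbstraction`, `height_m`, `facade_type`); it illustrates the idea of a compact building description and is not the schema used in the paper.

```python
from dataclasses import dataclass, field
from typing import List, Tuple

# Hypothetical schema for illustration; it is not the paper's Protocol Buffers format.
@dataclass
class Storey:
    height_m: float   # floor-to-floor height in metres
    facade_type: str  # facade label, e.g. "shopfront" or "regular_windows"

@dataclass
class BuildingAbstraction:
    footprint: List[Tuple[float, float]]  # polygon vertices (x, y) in order
    storeys: List[Storey] = field(default_factory=list)  # ground floor first

    def height(self) -> float:
        """Total building height as the sum of storey heights."""
        return sum(s.height_m for s in self.storeys)

    def footprint_area(self) -> float:
        """Footprint area via the shoelace formula (vertex order may be CW or CCW)."""
        pts = self.footprint
        twice_area = 0.0
        for (x0, y0), (x1, y1) in zip(pts, pts[1:] + pts[:1]):
            twice_area += x0 * y1 - x1 * y0
        return abs(twice_area) / 2.0

# A 20 m x 10 m building with a tall shopfront ground floor and four upper storeys.
b = BuildingAbstraction(
    footprint=[(0.0, 0.0), (20.0, 0.0), (20.0, 10.0), (0.0, 10.0)],
    storeys=[Storey(4.5, "shopfront")] + [Storey(3.0, "regular_windows") for _ in range(4)],
)
print(b.height())          # 16.5
print(b.footprint_area())  # 200.0
```

A few numbers and labels like these stand in for thousands of raw points, which is what makes abstractions cheap to store, compare, and render.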

However, extracting these abstractions from sensor data like point clouds is challenging, and traditional supervised approaches are limited by the lack of large-scale labeled datasets. That is where procedurally generated training data and transformers come in.

The Transformer Solution: Learning to Invert Procedural Models

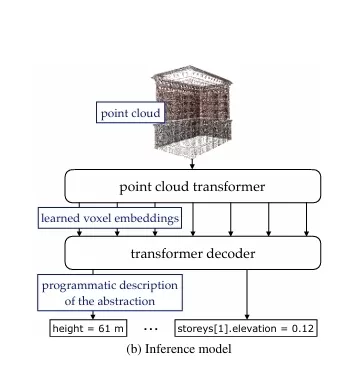

The core idea behind this research is to invert procedural models using a transformer model. Procedural models, which are widely used in gaming and animation for creating complex environments, define buildings in terms of sets of rules and assets (like windows, walls, and floors). The goal here is to take a point cloud—a collection of 3D points captured by sensors—and learn to predict the underlying procedural description of the building.

This is a bit like taking a complex, detailed photo of a building and converting it into a high-level sketch that highlights its most important structural features. The research uses a supervised training approach where the model learns from a synthetic dataset of procedural building abstractions paired with corresponding point clouds.
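To make the data-generation direction concrete, here is a toy version of it in plain Python. The "procedural model" is a hypothetical stand-in (a box with a random footprint and storey count), and the renderer simply samples points uniformly on the walls and roof; the procedural models and sensor simulation in the paper are far richer. Each training example pairs the rendered point cloud (model input) with the abstraction that produced it (target).

```python
import random
from typing import Dict, List, Tuple

Point = Tuple[float, float, float]

def sample_abstraction(rng: random.Random) -> Dict:
    """Toy stand-in for a procedural model: a box-shaped building with random size."""
    return {
        "width": rng.uniform(8.0, 30.0),  # x extent in metres
        "depth": rng.uniform(8.0, 30.0),  # y extent in metres
        "storeys": rng.randint(1, 10),
        "storey_height": 3.0,             # metres per storey
    }

def render_point_cloud(a: Dict, n_points: int, rng: random.Random) -> List[Point]:
    """Toy renderer: sample points uniformly over the four walls and the flat roof."""
    w, d = a["width"], a["depth"]
    h = a["storeys"] * a["storey_height"]
    # Pick faces in proportion to their area so point density is uniform.
    faces = {"front": w * h, "back": w * h, "left": d * h, "right": d * h, "roof": w * d}
    names, areas = zip(*faces.items())
    points: List[Point] = []
    for face in rng.choices(names, weights=areas, k=n_points):
        u, v = rng.random(), rng.random()
        if face == "front":
            points.append((u * w, 0.0, v * h))
        elif face == "back":
            points.append((u * w, d, v * h))
        elif face == "left":
            points.append((0.0, u * d, v * h))
        elif face == "right":
            points.append((w, u * d, v * h))
        else:  # roof
            points.append((u * w, v * d, h))
    return points

def make_dataset(n_pairs: int, n_points: int = 2048, seed: int = 0) -> List[Tuple[List[Point], Dict]]:
    """Each example pairs a rendered point cloud (model input) with its abstraction (target)."""
    rng = random.Random(seed)
    dataset = []
    for _ in range(n_pairs):
        abstraction = sample_abstraction(rng)
        dataset.append((render_point_cloud(abstraction, n_points, rng), abstraction))
    return dataset

pairs = make_dataset(n_pairs=3, n_points=512)
cloud, label = pairs[0]
print(len(pairs), len(cloud))  # 3 512
```

Learning the inverse means training a model to map `cloud` back to `label`. In this box world a bounding box would suffice; for the much richer procedural models used in the paper, that inverse mapping is what the transformer has to learn.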

Key Components of the Approach:

- Point Cloud Renderer: A renderer simulates point clouds from the procedural building abstractions, so that every abstraction in the dataset is paired with a realistic 3D observation of the same building.

- Transformer Encoder-Decoder: An encoder processes the point cloud, and a transformer decoder conditioned on the encoder's output predicts the corresponding programmatic description of the building’s abstraction.

- Programmatic Language: A custom data format based on Protocol Buffers is used to represent building abstractions efficiently. This format encodes the structure and geometry of buildings in a modular, hierarchical manner.

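- Training Objective: The model is trained to minimize the Kullback-Leibler divergence between the true distribution of abstractions given a point cloud and the distribution predicted by the model. Averaged over the training pairs, this amounts to maximizing the likelihood the model assigns to the true abstraction, so the model effectively learns the inverse of the procedural model.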
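The identity behind this objective can be written out as follows. It assumes that the KL divergence is taken from the true posterior $p(y \mid x)$ over abstractions $y$ given a point cloud $x$ to the model $q_\theta(y \mid x)$, and that the decoder factorizes autoregressively over the tokens $y_1, \dots, y_T$ of the programmatic description. Both are assumptions of this sketch rather than details stated above.

$$
\mathbb{E}_{p(x)}\Big[D_{\mathrm{KL}}\big(p(y \mid x)\,\|\,q_\theta(y \mid x)\big)\Big]
= \underbrace{\mathbb{E}_{p(x,y)}\big[\log p(y \mid x)\big]}_{\text{independent of } \theta}
\;-\; \mathbb{E}_{p(x,y)}\big[\log q_\theta(y \mid x)\big]
$$

$$
\log q_\theta(y \mid x) = \sum_{t=1}^{T} \log q_\theta(y_t \mid y_{<t}, x)
$$

Only the second term depends on $\theta$, so minimizing the expected KL divergence is the same as minimizing the per-token cross-entropy $-\sum_{t} \log q_\theta(y_t \mid y_{<t}, x)$ over the synthetic (point cloud, abstraction) pairs, which is the usual next-token loss for a transformer decoder.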

The Power of Transformers in 3D Abstraction

Why choose transformers for this task? Transformers have become the go-to architecture for sequence-to-sequence learning, first in NLP and increasingly in vision. Here, the transformer encodes the point cloud (represented as voxels) and decodes it into a description in the programmatic language. Attention lets the model relate distant parts of the input, which suits the task of inferring a global, structured description from complex, unstructured point cloud data.
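The voxel representation is not described in detail here. As a minimal sketch under that caveat, the code below quantizes points to a regular grid and turns each occupied voxel into one token (its integer grid index plus a point count), the kind of sparse sequence an encoder could attend over. The voxel size, token layout, and function names are hypothetical.

```python
import math
from collections import Counter
from typing import Iterable, List, Tuple

def voxelize(points: Iterable[Tuple[float, float, float]], voxel_size: float) -> Counter:
    """Count points per cubic voxel; keys are integer voxel indices (i, j, k)."""
    return Counter(
        (math.floor(x / voxel_size), math.floor(y / voxel_size), math.floor(z / voxel_size))
        for x, y, z in points
    )

def voxel_tokens(points: Iterable[Tuple[float, float, float]], voxel_size: float) -> List[Tuple[int, int, int, int]]:
    """One token per occupied voxel: (i, j, k, point_count), sorted for a deterministic order."""
    counts = voxelize(points, voxel_size)
    return sorted((i, j, k, n) for (i, j, k), n in counts.items())

pts = [(0.2, 0.3, 0.1), (0.4, 0.1, 0.9), (1.5, 0.2, 0.3), (-0.1, 0.0, 0.0)]
print(voxel_tokens(pts, voxel_size=1.0))
# [(-1, 0, 0, 1), (0, 0, 0, 2), (1, 0, 0, 1)]
```

Only occupied voxels become tokens, so the sequence length grows with the building's surface rather than with the full volume of the grid. In a real model each token would be embedded into a vector before entering the encoder.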

The model uses an encoder-decoder architecture: the encoder processes the point cloud input, and the decoder generates the corresponding programmatic description. Trained on a dataset of 341,721 pairs of point clouds and abstractions, the model achieves good reconstruction accuracy in terms of both geometry and structure.
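A decoder emits a flat sequence of tokens, so a hierarchical building description has to be linearized. The sketch below shows one hypothetical way to do this, with explicit open and close tokens so that a building can contain any number of storeys. The token names and field set are assumptions; the way the paper tokenizes its Protocol Buffers-based language is not described here.

```python
from typing import Dict, List, Tuple

# Hypothetical token format; field names and markers are illustrative only.
NUMERIC_FIELDS = {"width", "depth", "height"}

def serialize(building: Dict) -> List[str]:
    """Flatten a nested building description into a token sequence."""
    tokens = ["<building>", f"width={building['width']}", f"depth={building['depth']}"]
    for storey in building["storeys"]:
        tokens += ["<storey>", f"height={storey['height']}", f"facade={storey['facade']}", "</storey>"]
    tokens.append("</building>")
    return tokens

def _parse_field(token: str) -> Tuple[str, object]:
    """Split 'key=value' and convert numeric fields back to float."""
    key, value = token.split("=", 1)
    return key, float(value) if key in NUMERIC_FIELDS else value

def deserialize(tokens: List[str]) -> Dict:
    """Rebuild the nested description from a token sequence produced by serialize()."""
    it = iter(tokens)
    if next(it, None) != "<building>":
        raise ValueError("sequence must start with <building>")
    building: Dict = {"storeys": []}
    for tok in it:
        if tok == "</building>":
            break
        if tok == "<storey>":
            storey = {}
            for inner in it:  # consume tokens up to the matching </storey>
                if inner == "</storey>":
                    break
                key, value = _parse_field(inner)
                storey[key] = value
            building["storeys"].append(storey)
        else:
            key, value = _parse_field(tok)
            building[key] = value
    return building

b = {
    "width": 20.0,
    "depth": 12.5,
    "storeys": [{"height": 4.5, "facade": "shopfront"}, {"height": 3.0, "facade": "windows"}],
}
tokens = serialize(b)
print(tokens[:4])                # ['<building>', 'width=20.0', 'depth=12.5', '<storey>']
print(deserialize(tokens) == b)  # True
```

A decoder trained on sequences like these produces one token at a time, and the closing tokens mark where each nested element ends, so the output can always be parsed back into a structured description.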

Evaluating the Performance of the Model

The evaluation of this transformer-based model for 3D abstraction synthesis focused on three aspects:

- Geometric Reconstruction: The model reconstructed building geometry accurately, predicting the building’s height, storey layout, and facade design from the point cloud.

- Structural Consistency: Even when the input point cloud was incomplete (e.g., missing parts of the building due to occlusion), the transformer was able to generate structurally consistent abstractions, filling in missing details in a plausible way.

- Inpainting: The model performed well at "inpainting", that is, completing incomplete or noisy input, which suggests it can cope with the imperfect scans encountered in real-world applications.
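Which geometric metric the authors use is not stated here. A common choice for comparing a reconstruction with ground truth is the Chamfer distance between point samples of the two shapes. The sketch below implements a brute-force symmetric version (mean nearest-neighbour distance in both directions; some works use squared distances instead), together with a hypothetical `occlude` helper that crops away part of a cloud to mimic the incomplete input used when testing inpainting.

```python
import math
from typing import List, Tuple

Point = Tuple[float, float, float]

def chamfer_distance(a: List[Point], b: List[Point]) -> float:
    """Symmetric Chamfer distance: mean nearest-neighbour distance a->b plus b->a.
    Brute force, O(len(a) * len(b)); fine for small illustrative clouds."""
    def mean_nn(src: List[Point], dst: List[Point]) -> float:
        return sum(min(math.dist(p, q) for q in dst) for p in src) / len(src)
    return mean_nn(a, b) + mean_nn(b, a)

def occlude(points: List[Point], x_max: float) -> List[Point]:
    """Hypothetical occlusion: drop every point with x > x_max, as if that side were unseen."""
    return [p for p in points if p[0] <= x_max]

ground_truth = [(0.0, 0.0, 0.0), (1.0, 0.0, 0.0), (2.0, 0.0, 0.0)]
prediction = [(0.0, 0.0, 0.0), (1.0, 0.0, 0.0), (2.0, 0.0, 0.5)]

# The model would see only the partial cloud; its full prediction is scored against the full ground truth.
partial_input = occlude(ground_truth, x_max=1.0)
print(len(partial_input))  # 2
# Only the last point is off, by 0.5, so each direction contributes 0.5 / 3.
print(chamfer_distance(ground_truth, prediction))
```

Scoring the prediction against the complete ground truth, rather than against the partial input, is what rewards a model for filling in the occluded parts plausibly.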

Limitations and Future Directions

While the transformer model performed well, the authors noted some limitations:

- Procedural Model Constraints: The primary limitation lies in the procedural models themselves, as they may not capture all the nuances of real-world buildings. Enhancements to these models will be critical for improving real-world applicability.

- Data Augmentation: While the model performed well within the distribution of training data, further data augmentation could help it generalize to more diverse building styles and environments.

- Flexibility: The researchers suggest that making procedural models more flexible and adaptable will be crucial for real-world use cases, especially for buildings with unique or irregular designs.
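Which augmentations would help is left open here. A typical recipe for simulated point clouds, sketched below as an assumption, randomizes orientation, adds sensor-like noise, and drops points; the function name `augment` and its default parameters are hypothetical.

```python
import math
import random
from typing import List, Tuple

Point = Tuple[float, float, float]

def augment(points: List[Point], rng: random.Random, noise_std: float = 0.05,
            drop_prob: float = 0.1, max_yaw: float = math.pi) -> List[Point]:
    """Hypothetical augmentation for simulated point clouds:
    1) rotate the whole cloud by one random angle about the vertical (z) axis,
    2) add independent Gaussian jitter to every coordinate,
    3) randomly drop points to mimic sparse or partial scans."""
    yaw = rng.uniform(-max_yaw, max_yaw)
    c, s = math.cos(yaw), math.sin(yaw)
    out: List[Point] = []
    for x, y, z in points:
        if rng.random() < drop_prob:  # dropout: skip this point
            continue
        xr, yr = c * x - s * y, s * x + c * y  # rotation about z leaves heights unchanged
        out.append((xr + rng.gauss(0.0, noise_std),
                    yr + rng.gauss(0.0, noise_std),
                    z + rng.gauss(0.0, noise_std)))
    return out

rng = random.Random(42)
cloud = [(float(i), 0.0, 3.0) for i in range(1000)]
augmented = augment(cloud, rng)
print(len(augmented))  # on average 90% of the 1000 points survive
```

Rotating only about the vertical axis is a deliberate choice: buildings stand upright, so tilting them would create training examples that never occur in real scans.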

Real-World Applications of 3D Abstractions

This research has exciting potential for real-world applications:

- Urban Planning: Cities can use abstractions to quickly model their architecture, simulating the impact of new buildings on infrastructure or aesthetics.

- Game Development and Virtual Environments: Developers can create synthetic environments for training AI agents, enabling more efficient learning in complex 3D spaces.

- Smart Cities and Asset Management: Building abstractions can be used in asset management systems to track building conditions and optimize maintenance schedules.

Conclusion: The Future of 3D Abstraction Generation

In summary, the work of Dax et al. introduces a powerful framework for generating 3D building abstractions from point cloud data using transformers. By inverting procedural building models, the framework can efficiently capture the essential geometry and structure of buildings, paving the way for a wide range of applications from urban planning to AI training. As procedural models continue to evolve and become more flexible, the ability to generate realistic and adaptable abstractions will be a crucial tool in advancing the digital modeling of the built environment.

Stay Informed: Interested in how transformers are shaping the future of 3D modeling and urban planning? Subscribe to our blog for more insights into the latest advancements in AI-driven design and simulation.

Join the Conversation: What are your thoughts on using transformers for 3D abstraction generation? Let us know in the comments below or connect with us on social media!
