Contextual Reinforcement: A Breakthrough in Token Compression for Multimodal Large Language Models

Large language models (LLMs) have changed how we interact with AI, powering everything from conversational agents to multimodal content generation. As these models scale to handle more complex and diverse datasets, often combining text, images, and audio, how efficiently they manage and compress tokens becomes a crucial issue. Token management affects both model performance and computational cost, and as datasets grow in size and complexity, traditional token compression methods struggle to keep up.

A recent study introduced a novel approach to token compression using a concept known as contextual reinforcement. This mechanism dynamically adjusts the importance of tokens by accounting for their interdependencies and semantic relevance, allowing large models to achieve substantial reductions in token usage while maintaining the richness and coherence of information representation. The study’s findings promise to address many challenges in efficiently processing multimodal data, which is vital for real-world applications like advanced AI chatbots, automated content generation, and more.

Understanding the Core Concepts

Let’s break down some of the key terms and ideas central to this research:

-

Token Compression: This refers to the process of reducing the size of the input data tokens without losing critical information. In large language models, token compression is vital for managing the immense volume of data while keeping the computational load manageable.

-

Multimodal Data: Multimodal datasets involve multiple types of data (e.g., text, images, videos). For instance, a model might need to process both text and images to generate descriptions of photos or answer questions about videos.

-

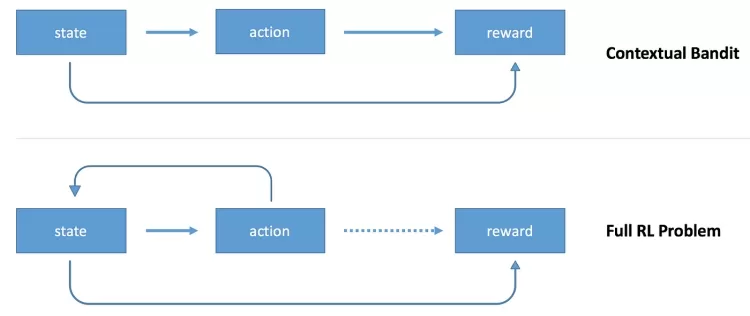

Contextual Reinforcement: This new approach uses the relationships between tokens (whether they are words, phrases, or other data points) to dynamically adjust their importance during compression. This method learns which tokens are crucial for understanding the context and which can be safely ignored or compressed further.

-

Reinforcement Mechanisms: Inspired by cognitive processes in humans, reinforcement in this context refers to adjusting the focus or weight of certain elements based on their relevance to a task or decision, ensuring the most relevant parts of the data are preserved.

The Challenge Addressed by the Research

As models scale up, they face two major challenges:

- Efficient Token Management: The sheer volume of data requires models to compress tokens to reduce memory and computational overhead. However, traditional methods often rely on predefined rules or heuristics that fail to capture the nuanced relationships between tokens in real-world data.

- Multimodal Complexity: When models deal with multimodal data, they must understand how different types of data (like text and images) interact. Conventional token compression methods struggle to manage these interactions effectively, often leading to suboptimal data representations.

The study introduced contextual reinforcement as a solution to these challenges. This method enables models to prioritize semantically significant information and adjust token compression dynamically, taking into account both local and global context.
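As a rough illustration of what "both local and global context" could mean in practice, the sketch below scores each token by blending its similarity to nearby tokens with its similarity to the sequence as a whole. The function names (`context_scores`, `mean_vector`), the `window` and `alpha` parameters, and the choice of cosine similarity are all assumptions made for this example; the study's actual scoring rule is not described here.

```python
import math


def cosine(u, v):
    """Cosine similarity of two equal-length vectors (0.0 if either is all zeros)."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    if norm_u == 0.0 or norm_v == 0.0:
        return 0.0
    return dot / (norm_u * norm_v)


def mean_vector(vectors):
    """Element-wise mean of a non-empty list of vectors."""
    return [sum(column) / len(vectors) for column in zip(*vectors)]


def context_scores(embeddings, window=1, alpha=0.5):
    """Blend local and global context into one score per token.

    window and alpha are hypothetical knobs: window sets how many
    neighbours on each side count as local context, and alpha weights
    local similarity against global similarity.
    """
    global_context = mean_vector(embeddings)
    scores = []
    for i, vec in enumerate(embeddings):
        start, stop = max(0, i - window), min(len(embeddings), i + window + 1)
        neighbours = [embeddings[j] for j in range(start, stop) if j != i]
        # Local context: similarity to the average of nearby tokens.
        local = cosine(vec, mean_vector(neighbours)) if neighbours else 0.0
        # Global context: similarity to the average of the whole sequence.
        scores.append(alpha * local + (1 - alpha) * cosine(vec, global_context))
    return scores


# Toy 2-D embeddings, made up purely for illustration.
toy_embeddings = [[1.0, 0.0], [0.9, 0.1], [0.0, 1.0], [0.8, 0.2]]
for index, score in enumerate(context_scores(toy_embeddings)):
    print(index, round(score, 3))
```

In this toy input the third vector points away from the rest, so it receives the lowest score and would be the first candidate for compression under this assumed rule.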

A New Approach

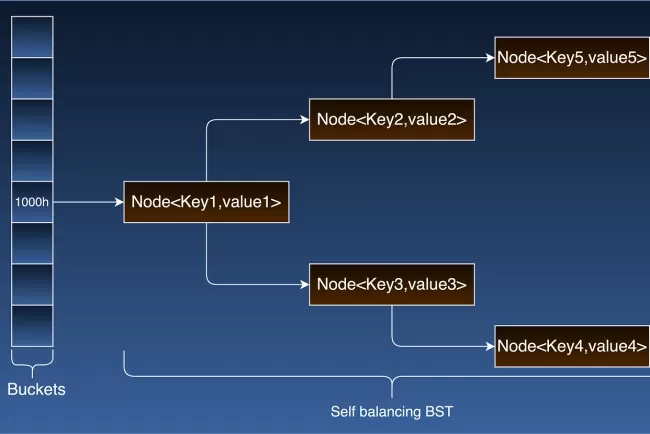

The researchers introduced contextual reinforcement into token compression pipelines, making a significant departure from traditional methods. Rather than compressing tokens in isolation, this approach evaluates how tokens relate to one another. By using graph-based algorithms and adaptive weighting, the model can assess token relevance dynamically. Here’s how it works:

- Dynamic Adjustment of Token Importance: Instead of compressing all tokens uniformly, the model can recognize which tokens are critical for understanding a particular context (e.g., which words or features are crucial in an image caption) and which are less important.

- Improved Efficiency and Accuracy: By incorporating reinforcement-based mechanisms, the model can achieve substantial reductions in token usage without sacrificing the quality of the information. This leads to better computational efficiency while preserving the accuracy and semantic integrity of the model’s outputs.

- Cross-Modal Performance: The contextual reinforcement mechanism enhances multimodal interactions by ensuring that the relationships between different types of data (such as text and image) are effectively captured, leading to improved performance in tasks requiring detailed cross-modal interactions.
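The combination of graph-based scoring and adaptive weighting described above can be sketched in a few lines. This is a minimal illustration, not the researchers' algorithm: it assumes tokens are nodes, edge weights are non-negative cosine similarities between embeddings, and importance is propagated with a PageRank-style update. The names `build_token_graph`, `reinforce`, and `compress`, along with the `damping` and `keep_ratio` parameters, are hypothetical.

```python
import math


def cosine(u, v):
    """Cosine similarity of two equal-length vectors (0.0 if either is all zeros)."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    if norm_u == 0.0 or norm_v == 0.0:
        return 0.0
    return dot / (norm_u * norm_v)


def build_token_graph(embeddings):
    """Adjacency matrix whose edge weights are non-negative cosine similarities."""
    n = len(embeddings)
    return [
        [max(0.0, cosine(embeddings[i], embeddings[j])) if i != j else 0.0
         for j in range(n)]
        for i in range(n)
    ]


def reinforce(graph, prior, damping=0.85, iterations=30):
    """Propagate importance along edges (PageRank-style, assumed for this sketch).

    Each token keeps a share of its prior importance and receives the
    rest from the tokens it is related to, so well-connected tokens
    are reinforced over the iterations.
    """
    n = len(graph)
    total = sum(prior)
    prior = [p / total for p in prior]
    out_weight = [sum(row) for row in graph]
    scores = prior[:]
    for _ in range(iterations):
        updated = []
        for j in range(n):
            incoming = sum(
                scores[i] * graph[i][j] / out_weight[i]
                for i in range(n) if out_weight[i] > 0.0
            )
            updated.append((1.0 - damping) * prior[j] + damping * incoming)
        scores = updated
    return scores


def compress(tokens, scores, keep_ratio=0.5):
    """Keep the highest-scoring tokens, preserving their original order."""
    keep = max(1, math.ceil(len(tokens) * keep_ratio))
    ranked = sorted(range(len(tokens)), key=lambda i: scores[i], reverse=True)
    return [tokens[i] for i in sorted(ranked[:keep])]


# Toy tokens and 2-D embeddings, made up purely for illustration.
tokens = ["dog", "puppy", "uh", "barks"]
embeddings = [[1.0, 0.0], [0.9, 0.1], [0.0, 1.0], [0.8, 0.2]]
graph = build_token_graph(embeddings)
scores = reinforce(graph, prior=[1.0] * len(tokens))
print(compress(tokens, scores, keep_ratio=0.5))
```

A real pipeline would presumably derive edge weights from model internals, such as attention or learned embeddings, and would adapt the keep ratio per input rather than fixing it; those details are outside what this article describes.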

Implications for the Field

This research has several important implications:

- Practical Applications: The improved token compression and efficient handling of multimodal data can make large-scale language models more accessible and practical for real-time applications. This includes areas like conversational systems, where multiple forms of input (e.g., text and voice) are processed simultaneously, and content generation, where the interaction between text, images, and videos is crucial.

- Computational Efficiency: With token compression that reduces redundancy while preserving critical information, models can process larger datasets with less memory and fewer computational resources. This translates into lower costs for deploying these models at scale, particularly in resource-constrained environments.

- Future Research Directions: This approach opens up possibilities for further innovations in token management. Future research could explore how to refine the reinforcement mechanisms, handle even more complex datasets, or make the system more adaptable to different domains and languages.
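To put rough numbers on the Computational Efficiency point above, here is a back-of-the-envelope estimate. It assumes a standard dense self-attention layer, where attention cost grows quadratically with sequence length and key-value cache memory grows linearly. The symbols $n$ (original token count), $r$ (fraction of tokens kept), and $d$ (hidden size) are introduced for this illustration only, and the figures are not results from the study.

$$
\text{attention cost} \propto n^2 d \;\longrightarrow\; (rn)^2 d = r^2 n^2 d,
\qquad
\text{cache memory} \propto n d \;\longrightarrow\; r n d
$$

For example, keeping half the tokens ($r = 0.5$) would cut attention cost to roughly a quarter of the original and cache memory to a half, before accounting for the overhead of the compression step itself.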

Conclusion: A Step Forward in Token Management for Large Language Models

By introducing contextual reinforcement, the researchers have taken a bold step toward rethinking how token compression is handled in large language models. This innovative approach not only enhances the efficiency and performance of the models but also offers new avenues for optimizing the processing of multimodal data. The research demonstrates that it is possible to balance computational efficiency with high-quality, context-rich representations, making large-scale models more scalable and adaptable for real-world applications.

As AI systems continue to grow more complex and data-intensive, the methods proposed in this study may play a critical role in the next generation of language models, enabling them to better understand and process the rich, multimodal world we live in.

What’s Your Take?

How do you think the use of contextual reinforcement could impact the future of AI, especially in handling complex, multimodal datasets? Do you see this approach being applied to other areas like vision or speech recognition? Share your thoughts!
