Unlocking the Secrets of Reinforcement Learning: Modular Interpretability and Community Detection in RL Networks

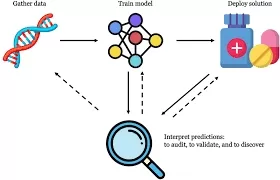

In recent years, deep reinforcement learning (RL) has become a transformative force in artificial intelligence, allowing machines to learn effective strategies through trial and error. From game-playing agents like AlphaGo to autonomous systems navigating complex environments, RL has delivered strong results across diverse domains. However, one major hurdle remains: interpretability. As RL models grow more complex, understanding how they make decisions becomes harder, yet that understanding is crucial for transparency, trust, and effective deployment in real-world applications. This is where the research paper “Induced Modularity and Community Detection for Functionally Interpretable RL” steps in, offering a fresh perspective on how to make RL networks more interpretable.

In this blog post, we’ll dive into how modular interpretability—inspired by cognitive science—can provide insights into RL decision-making, offering a framework that aligns with how humans process information.

The Challenge of Interpretability in RL

Deep reinforcement learning excels in making complex decisions, but this comes at the cost of interpretability. The “black-box” nature of these models means that while they can achieve remarkable performance, understanding the reasoning behind their decisions is often elusive. In safety-critical applications such as healthcare or autonomous driving, having clear explanations for model behavior is not just beneficial; it's essential.

However, the definition of interpretability itself remains ambiguous. What does it really mean for an RL model to be interpretable? According to Lipton (2016), interpretability is about producing an explanation that a human can understand and use to predict the model’s behavior, ideally with a reasonable amount of effort. It involves a trade-off: the simpler the explanation, the easier it is to follow, but the harder it is to keep it accurate. How can we strike that balance with complex RL systems?

Cognitive Science Meets RL

Drawing inspiration from cognitive science, the authors of the paper propose an innovative approach to modular interpretability. Cognitive science often views the brain as a collection of specialized, semi-encapsulated modules that process information in parallel. This modular structure aids in human decision-making and learning, suggesting that the same approach could help explain how RL systems make decisions.

The central idea is simple yet powerful: If we could structure the RL network into functional modules—each module handling specific decision-making tasks—these modules could potentially offer interpretable "chunks" of information that align with how humans understand decisions.

Key Contributions of the Research

The authors make three contributions aimed at the interpretability of RL networks. Here's a closer look at each:

1. Encouraging Locality in Neural Networks

Building on recent bio-inspired algorithms, the paper demonstrates that penalizing non-local weights within a neural network encourages the emergence of semi-encapsulated functional modules. By introducing locality constraints during training, the network self-organizes into regions that focus on specific tasks. This form of functional modularity mimics the way humans tackle problems by breaking them down into smaller, manageable parts. The result? More interpretable decision-making processes within RL agents.

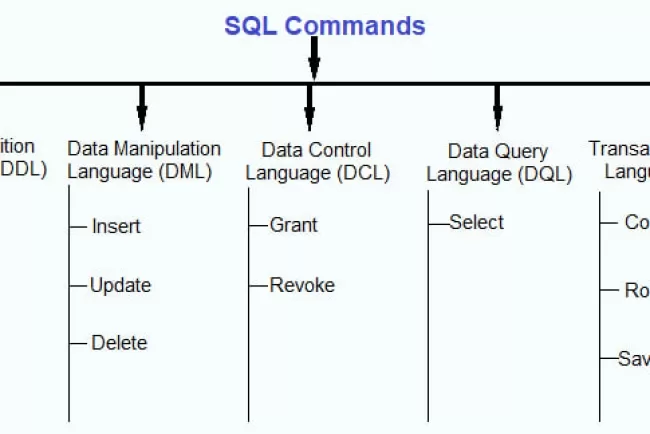
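To make the idea of "penalizing non-local weights" concrete, here is a minimal sketch of one possible locality penalty. It is not the paper's implementation. We assume, purely for illustration, that the neurons of each layer sit at evenly spaced positions on a line, and that the cost of a connection is its absolute weight times the distance between its two endpoints. The names `positions`, `locality_penalty`, and `strength` are hypothetical.

```python
def positions(n):
    """Evenly spaced coordinates in [0, 1] for the n neurons of one layer (an assumption)."""
    return [0.5] if n == 1 else [i / (n - 1) for i in range(n)]


def locality_penalty(weights, strength=1.0):
    """Sum of |w_ij| * distance(i, j) over one weight matrix.

    weights[i][j] connects neuron i on the input side to neuron j on the output side.
    """
    src = positions(len(weights))
    dst = positions(len(weights[0]))
    return strength * sum(
        abs(w) * abs(src[i] - dst[j])
        for i, row in enumerate(weights)
        for j, w in enumerate(row)
    )


# Same weight magnitudes, different wiring.
local = [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 1.0]]    # each neuron feeds its neighbor
crossed = [[0.0, 0.0, 1.0], [0.0, 1.0, 0.0], [1.0, 0.0, 0.0]]  # outer neurons feed the far side

print(locality_penalty(local), locality_penalty(crossed))
```

In training, a term like this would be added to the RL loss, so that long-range connections survive only when they earn their cost. The locally wired matrix incurs no penalty here, while the crossed one does, even though both contain the same weights.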

2. Automating Community Detection in Neural Networks

The paper leverages community detection methods to identify and define these functional modules. Using the Louvain algorithm and spectral analysis of the adjacency matrix, the authors show that it’s possible to automatically detect modules within a neural network. This automated process offers an efficient way to scale interpretability efforts, even in large and complex RL systems. Rather than manually analyzing the inner workings of a network, these methods provide an algorithmic way to detect meaningful sub-networks or modules.
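Louvain works by greedily searching for a partition of the graph that maximizes a score called modularity. The sketch below does not run that search. It only shows the two ingredients it relies on: turning a network's weights into a graph, and scoring a candidate partition. Treating the absolute weight as the edge strength is our assumption, and the function names and the tiny two-pathway network are made up for illustration.

```python
def adjacency(layers):
    """Symmetric adjacency matrix over all neurons, using |weight| as edge strength."""
    sizes = [len(layers[0])] + [len(w[0]) for w in layers]
    offsets = [sum(sizes[:k]) for k in range(len(sizes))]
    n = sum(sizes)
    adj = [[0.0] * n for _ in range(n)]
    for depth, weights in enumerate(layers):
        for i, row in enumerate(weights):
            for j, w in enumerate(row):
                a, b = offsets[depth] + i, offsets[depth + 1] + j
                adj[a][b] = adj[b][a] = abs(w)
    return adj


def modularity(adj, labels):
    """Modularity Q of a partition; labels[i] is the community of node i."""
    degree = [sum(row) for row in adj]
    two_m = sum(degree)
    q = 0.0
    for i in range(len(adj)):
        for j in range(len(adj)):
            if labels[i] == labels[j]:
                q += adj[i][j] - degree[i] * degree[j] / two_m
    return q / two_m


# Hypothetical 2-2-2 network: two strong parallel pathways with weak cross-talk.
layers = [
    [[1.0, 0.05], [0.05, 1.0]],
    [[1.0, 0.05], [0.05, 1.0]],
]
adj = adjacency(layers)

by_pathway = modularity(adj, [0, 1, 0, 1, 0, 1])  # group neurons along each pathway
by_layer = modularity(adj, [0, 0, 1, 1, 2, 2])    # group neurons by layer instead

print(by_pathway, by_layer)
```

Grouping neurons by pathway scores higher than grouping them by layer, which is the signal a community detection algorithm picks up on. For an actual search over partitions, a graph library is the practical route; recent versions of NetworkX, for example, provide `louvain_communities`.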

3. Characterizing Detected Modules

Once functional modules are detected, the next step is understanding what each module actually does. The authors propose a direct intervention approach: By modifying network weights before inference, researchers can observe how each module behaves, providing a verifiable interpretation of the module’s function. This means that each module’s role in decision-making can be tested and understood in isolation, offering a clearer picture of how the overall RL agent functions.
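One simple way to picture such an intervention is ablation: zero every weight that touches a module's neurons, run the network, and compare the output with the unmodified run. The sketch below assumes a tiny bias-free network with ReLU hidden units and a hand-picked module. Both are illustrative choices of ours rather than the paper's setup, and `forward` and `ablate` are hypothetical helper names.

```python
def forward(layers, x):
    """Run a bias-free MLP: ReLU on hidden layers, linear output layer."""
    for depth, weights in enumerate(layers):
        x = [
            sum(x[i] * weights[i][j] for i in range(len(weights)))
            for j in range(len(weights[0]))
        ]
        if depth < len(layers) - 1:
            x = [max(0.0, v) for v in x]
    return x


def ablate(layers, module):
    """Copy the weights, zeroing every connection that touches a module neuron.

    module is a set of (layer, index) pairs; layer 0 is the input layer.
    """
    return [
        [
            [0.0 if (depth, i) in module or (depth + 1, j) in module else w
             for j, w in enumerate(row)]
            for i, row in enumerate(weights)
        ]
        for depth, weights in enumerate(layers)
    ]


# Hypothetical network with two independent pathways.
layers = [
    [[1.0, 0.0], [0.0, 1.0]],
    [[2.0, 0.0], [0.0, 3.0]],
]
state = [1.0, 1.0]
module_a = {(1, 0)}  # the first hidden neuron, chosen by hand for this example

baseline = forward(layers, state)
ablated = forward(ablate(layers, module_a), state)

print(baseline, ablated)
```

Only the first output changes when the module is silenced, so in this toy case we can say with some confidence that the module serves that output and nothing else. The original weights are left untouched, which makes it easy to repeat the test for each detected module.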

How This Advances RL Interpretability

The authors' modular approach to RL interpretability marks a shift from traditional methods. Previously, interpretable policies were typically built from decision trees or symbolic equations. These approaches work in some cases, but they can be computationally expensive and struggle to scale to more complex settings.

By encouraging functional modularity within the RL network, this new approach allows for:

- Scalability: Unlike decision trees or symbolic equations, this modular approach scales more effectively in large, high-dimensional networks.

- Human-Understandable Units: With modular decomposition, each module can be treated as a “cognitive chunk,” a human-friendly explanation of the network’s decision-making process.

- Verification: The ability to directly intervene and verify module behavior provides a more robust form of interpretability, avoiding the pitfalls of post-hoc explanations that may be speculative or subjective.

Real-World Applications

The potential of functionally interpretable RL is vast. Here are just a few examples of where this research could make an impact:

- Autonomous Vehicles: Understanding how RL systems make driving decisions is crucial for ensuring safety. By breaking down decisions into functional modules, we can more easily assess and improve these systems.

- Healthcare: In medical decision support systems, it’s vital to understand why a model recommends a certain treatment. Functional modularity could allow doctors to better trust AI systems by explaining their reasoning in clear, understandable terms.

- Finance: For AI systems involved in trading or investment, understanding the reasoning behind decisions can help with risk management and regulatory compliance.

Conclusion: The Future of Interpretable AI

As AI systems become more integral to our daily lives, the demand for interpretable models will only increase. The modular interpretability framework introduced in this research offers a promising solution by breaking down complex decision-making processes into simpler, more understandable units. Through the use of community detection, modular training, and direct intervention, RL systems can become more transparent and accessible, aligning more closely with human understanding.

The work done by these researchers opens up exciting possibilities for scalable, functionally interpretable RL, providing both theoretical insights and practical tools for improving trust and transparency in AI. As these techniques continue to evolve, we can expect a future where AI not only performs complex tasks but does so in a way that we can all understand.
