Improving Interpretability and Accuracy in Neuro-Symbolic Rule Extraction Using Class-Specific Sparse Filters

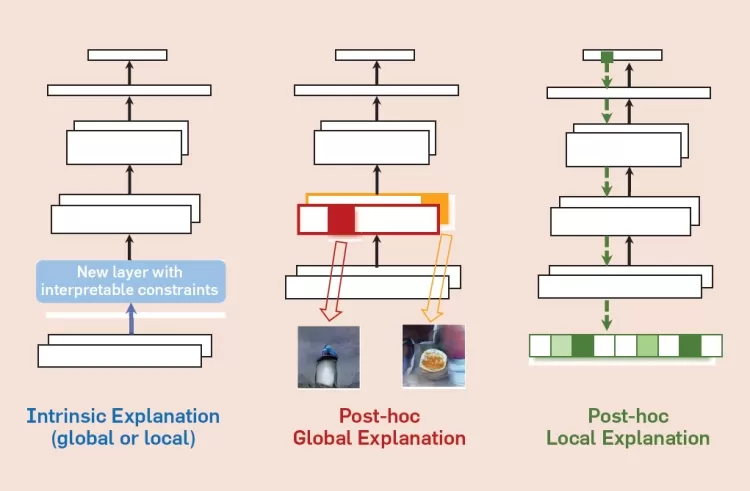

In recent years, significant strides have been made in creating interpretable image classification models that integrate deep learning with symbolic reasoning. These neuro-symbolic models, which combine Convolutional Neural Networks (CNNs) for feature extraction with symbolic rule-sets for classification, have shown great promise.

However, one major limitation of these models has been a trade-off between interpretability and accuracy. Specifically, while these models provide understandable rule-based explanations for their decisions, they often suffer from a reduction in accuracy compared to the original CNN models. This paper proposes a novel approach to tackle this issue by using class-specific sparse filters, which enhance both the interpretability and accuracy of neuro-symbolic rule extraction.

Challenges with Traditional Neuro-Symbolic Models

The integration of CNNs with symbolic rule extraction typically involves binarizing the activations of the CNN's filters. This step simplifies the filter outputs, converting continuous values into binary representations (0/1). These binary outputs are then used to create a rule-set, which is interpreted symbolically for classification. While this approach provides interpretability, it comes at a significant cost: accuracy loss. This loss occurs because the original CNN filters were optimized to capture rich, continuous features, and binarizing them discards much of the underlying information.
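The binarization step described above can be sketched in a few lines of Python. This is a minimal illustration, not the NeSyFOLD implementation: it assumes each filter's feature map is first reduced to a single number (here its L2 norm) and that the threshold for each filter is simply the mean of that number over the dataset. The function names and the toy values are hypothetical.

```python
import math
from statistics import mean

def filter_norm(feature_map):
    """Collapse one filter's 2D feature map into a single L2 norm."""
    return math.sqrt(sum(v * v for row in feature_map for v in row))

def mean_thresholds(norms_per_image):
    """Assumed threshold rule: per-filter mean of the norms over all images."""
    n_filters = len(norms_per_image[0])
    return [mean(img[k] for img in norms_per_image) for k in range(n_filters)]

def binarize(norms_per_image, thresholds):
    """Map each continuous norm to 1 if it exceeds its filter's threshold, else 0."""
    return [
        [1 if n > t else 0 for n, t in zip(norms, thresholds)]
        for norms in norms_per_image
    ]

# Toy data: 3 images x 3 filters (made-up norms, for illustration only).
norms = [
    [4.0, 0.5, 1.0],
    [0.5, 3.0, 1.2],
    [3.5, 0.4, 0.9],
]
thresholds = mean_thresholds(norms)
bits = binarize(norms, thresholds)
print(bits)  # [[1, 0, 0], [0, 1, 1], [1, 0, 0]]
```

Note how the third filter's norms (1.0, 1.2, 0.9) are nearly identical yet end up on different sides of the threshold. That is the kind of information loss the text refers to: small continuous differences are turned into hard 0/1 decisions.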

The NeSyFOLD framework, the current state-of-the-art method for neuro-symbolic rule extraction, uses this binarization process to convert filter activations into symbolic rules. Because the binarization is applied only after training, to filters that were never optimized for it, the information it discards translates into a substantial drop in the accuracy of the generated rule-set relative to the original CNN.
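To make the idea of a rule-set over binarized filters concrete, here is a small sketch. The rules, labels, and filter indices are invented for illustration and are not output from NeSyFOLD. The sketch also assumes rules are tried in order and that rule-set size is measured as the total number of filter literals in the rule bodies.

```python
# Each rule: (label, filters that must be 1, filters that must be 0).
# Hypothetical rules over three binarized filters, tried top to bottom.
RULES = [
    ("bathroom", [0], [1]),  # bathroom if filter0 is on and filter1 is off
    ("bedroom", [1], []),    # bedroom if filter1 is on
]

def classify(bits, rules, default="unknown"):
    """Return the label of the first rule whose conditions all hold."""
    for label, must_be_on, must_be_off in rules:
        on_ok = all(bits[i] == 1 for i in must_be_on)
        off_ok = all(bits[i] == 0 for i in must_be_off)
        if on_ok and off_ok:
            return label
    return default

def rule_set_size(rules):
    """Assumed size measure: total number of filter literals across all rules."""
    return sum(len(on) + len(off) for _, on, off in rules)

print(classify([1, 0, 0], RULES))  # bathroom
print(classify([0, 1, 1], RULES))  # bedroom
print(rule_set_size(RULES))        # 3
```

Each prediction can be traced back to the specific filters that fired, which is where the interpretability comes from. A smaller rule-set means fewer such conditions for a person to read.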

The Novel Approach: Class-Specific Sparse Filters

To address this challenge, the authors propose a novel sparsity loss function designed to optimize the CNN training process such that class-specific filter outputs are directly trained to approach binary values (0/1). This is achieved by encouraging only a small subset of filters to be activated for each class, effectively learning sparse filters that are more efficient for the rule extraction process.

The sparsity loss function encourages the network to converge towards a representation where only a few filters respond to the relevant features for each class. This allows the network to learn class-specific filters, which minimizes the information loss during the binarization process, while still preserving the high-level features necessary for accurate classification.
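This article does not give the formula for the sparsity loss, so the following is only a sketch of one plausible form and should not be read as the authors' definition. It assumes each class has a binary mask marking its few designated filters, and it penalizes the binary cross-entropy between the squashed filter scores and that mask. The names `sparsity_loss`, `class_mask`, and the weight `lam` are hypothetical.

```python
import math

def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))

def sparsity_loss(filter_scores, class_mask, eps=1e-12):
    """Assumed form: mean binary cross-entropy between sigmoid(score) and a 0/1 mask.

    filter_scores: one real-valued score per filter for a single image.
    class_mask:    1 for filters assigned to the image's class, 0 otherwise.
    """
    total = 0.0
    for score, target in zip(filter_scores, class_mask):
        p = sigmoid(score)
        total -= target * math.log(p + eps) + (1 - target) * math.log(1 - p + eps)
    return total / len(filter_scores)

def total_loss(classification_loss, sparsity, lam=0.5):
    """Assumed combination: usual classification loss plus a weighted sparsity term."""
    return classification_loss + lam * sparsity

mask = [1, 0, 0]  # only filter 0 belongs to this image's class
aligned = sparsity_loss([5.0, -5.0, -5.0], mask)     # filters agree with the mask
misaligned = sparsity_loss([-5.0, 5.0, 5.0], mask)   # filters contradict the mask
print(aligned < misaligned)  # True
```

Under this assumed form, minimizing the loss pushes the class's own filters toward 1 and all other filters toward 0 during training, so a later hard threshold changes very little.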

Key Contributions of the Work

- Sparsity Loss Function: The authors introduce a sparsity loss function that forces the CNN to learn sparse filters with class-specific activations, reducing the information loss that typically occurs during binarization.
- Improved Accuracy and Smaller Rule-Set: By using this sparsity loss function, the authors demonstrate that the NeSy model achieves a 9% improvement in accuracy over previous methods, while also reducing the rule-set size by 53% on average. This improvement brings the performance of the neuro-symbolic model within just 3% of the original CNN model’s accuracy.
- Comprehensive Training Strategy Analysis: The authors provide a thorough analysis of five different training strategies that use the sparsity loss function, offering guidance on their effectiveness in different contexts. This analysis provides a roadmap for practitioners looking to integrate this method into their own systems.

Why Does This Work Matter?

The trade-off between interpretability and accuracy has been a long-standing challenge in the field of machine learning, particularly in domains like healthcare and autonomous vehicles, where the ability to explain model decisions is crucial. By improving the accuracy of neuro-symbolic models while maintaining their interpretability, this approach makes these models a more viable alternative to "black-box" CNNs, particularly in safety-critical applications where both performance and transparency are essential.

Results and Comparison to Previous Methods

The experiments conducted show that the proposed sparsity loss function significantly improves the performance of the neuro-symbolic model. The reduction in rule-set size (53%) not only enhances the model's interpretability but also makes it more efficient, which is a critical factor when deploying models in real-world applications. The 9% increase in accuracy, while maintaining the interpretability benefits, demonstrates the potential of this approach to close the gap between interpretability and performance.

Furthermore, the new approach outperforms previous methods such as NeSyFOLD-EBP (Elite BackProp), which also employs sparse filters but lacks the novel sparsity loss function. The improvement in both accuracy and rule-set size sets a new benchmark for interpretable image classification using neuro-symbolic models.

Conclusion

This study introduces an innovative method for improving the interpretability and accuracy of neuro-symbolic rule extraction by using class-specific sparse filters and a novel sparsity loss function. By addressing the issue of information loss during filter binarization, the authors demonstrate that it is possible to maintain high classification accuracy while extracting interpretable symbolic rules. This work not only advances the field of interpretable AI but also offers a practical solution for creating more efficient and accurate models, making them suitable for use in sensitive applications where both transparency and performance are required.
