Data Duplication: A Novel Multi-Purpose Attack Paradigm in Machine Unlearning

The quality of a training dataset strongly influences both the performance and the privacy of a machine learning (ML) model. Large datasets can improve accuracy, but they also carry risks, one of which is data duplication. Duplication in training data is a well-known issue, yet its impact on machine unlearning, including federated unlearning and reinforcement unlearning, has been largely unexplored. This paper introduces a novel multi-purpose attack paradigm that uses data duplication to disrupt the unlearning process in machine learning models.

The Challenge of Data Duplication in Unlearning

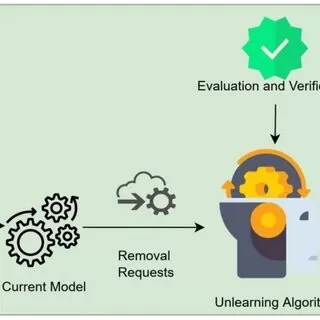

Machine unlearning refers to the process where a model "unlearns" specific data points, especially when users request that their personal data be removed due to privacy concerns or data protection regulations like GDPR. Existing methods of unlearning primarily focus on deleting or approximating the removal of a specific set of data, ensuring that its influence is erased from the model.

However, the presence of duplicated data within the training set complicates the unlearning process. Specifically, an adversary who duplicates a portion of the training set and later requests its removal creates the following problems:

- Verification Challenges: When duplicated data is present, it is difficult to verify whether unlearning succeeded, because the remaining copy stays in the training set and continues to influence the model.

- Model Collapse: If the duplicated data carries features the model relies on, unlearning one duplicate can impair the model’s ability to generalize, leading to degradation or, in the worst case, collapse.

- Circumvention of De-duplication: Traditional de-duplication techniques aim to identify and remove duplicate data. However, adversaries can craft near-duplicates that evade detection while still posing a significant challenge to the unlearning process.
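The last point can be illustrated with a minimal sketch. It assumes a de-duplication filter based on exact hashing, which is one common baseline and not necessarily the one evaluated in the paper; the function `exact_dedup` and the sample strings are hypothetical.

```python
import hashlib


def exact_dedup(samples):
    """Keep the first occurrence of each byte-identical sample."""
    seen = set()
    kept = []
    for sample in samples:
        digest = hashlib.sha256(sample).hexdigest()
        if digest not in seen:
            seen.add(digest)
            kept.append(sample)
    return kept


# All sample contents are made up for illustration.
original = b"the quick brown fox jumps over the lazy dog"
exact_copy = bytes(original)                      # byte-identical duplicate
near_copy = original.replace(b"quick", b"qu1ck")  # hypothetical near-duplicate

deduped = exact_dedup([original, exact_copy, near_copy])

# The exact copy is dropped, but the near-duplicate passes the filter.
print(len(deduped), near_copy in deduped)
```

A filter that compares approximate similarity would catch this particular edit, which is why the near-duplicates discussed later are built to look different while behaving the same.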

Proposed Adversarial Attack Paradigm

The attack paradigm proposed in this research introduces a two-step adversarial approach:

- Data Duplication: An adversary injects a set of duplicated data into the model’s training dataset. These duplicates can be exact copies or near-duplicates, synthetically created to appear perceptually different while remaining functionally similar to the original data. The adversary’s goal is to embed these duplicates so that they influence the model but remain difficult to detect.

- Unlearning Challenge: After the model has been trained with the duplicated data, the adversary requests the unlearning of the duplicated data. Because other identical or near-identical data points remain in the training set, the unlearning process becomes difficult. The adversary can then verify that, despite the unlearning effort, the model still retains knowledge from the duplicated data.

This attack exploits the flaws in existing machine unlearning methods, which assume that data can be completely and reliably removed. By introducing duplicate or near-duplicate data, the adversary can manipulate the unlearning process and challenge the integrity of model owners' claims of data removal.
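The two steps can be sketched with a toy model. This is a minimal illustration, not the paper's experimental setup: the "model" is a hypothetical 1-nearest-neighbour classifier that memorises its training records, unlearning is done by retraining from scratch on the retained records, and all record ids, values, and function names are made up.

```python
def train(dataset):
    """'Train' a 1-nearest-neighbour model by memorising (feature, label) pairs."""
    return [(x, y) for _id, x, y in dataset]


def predict(model, x):
    """Return the label of the stored point closest to x."""
    nearest = min(model, key=lambda record: abs(record[0] - x))
    return nearest[1]


def unlearn_by_retraining(dataset, forget_ids):
    """Retrain from scratch on every record whose id is not in forget_ids."""
    retained = [rec for rec in dataset if rec[0] not in forget_ids]
    return retained, train(retained)


# Records are (record_id, feature, label).
clean = [("a", 0.0, "cat"), ("b", 1.0, "cat"), ("c", 10.0, "dog")]

# Step 1: the adversary copies record "c" under a new id before training.
poisoned = clean + [("c_dup", 10.0, "dog")]
model = train(poisoned)

# Step 2: the adversary asks for the duplicate to be unlearned.
retained, unlearned_model = unlearn_by_retraining(poisoned, {"c_dup"})

# The original "c" is still retained, so the model's behaviour is unchanged.
print(predict(model, 10.0), predict(unlearned_model, 10.0))
```

In this sketch the requested record is removed exactly as asked, yet the model's output on that input is the same before and after unlearning, which is the behaviour the adversary points to when disputing a claim of data removal.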

Near-Duplication Methods

To circumvent traditional de-duplication techniques, the paper introduces several novel near-duplication methods:

- Feature Distance Minimization: These methods create data samples that are close to the original data in feature space but perceptually distinct. By minimizing the feature distance between the duplicate and the original while maintaining perceptual differences, these near-duplicates are more likely to evade de-duplication techniques.

- Perceptual Difference Maximization: In addition to minimizing feature distance, the adversary seeks to maximize the perceptual difference, so that the near-duplicates are not flagged by common de-duplication algorithms that compare samples by surface-level similarity.
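The interplay of the two objectives can be shown with a small sketch. It is not the paper's method: it assumes a hypothetical linear feature extractor `f(x) = W x` and uses Euclidean distance in input space as a crude stand-in for perceptual difference. Under those assumptions, shifting a sample along a direction in the null space of `W` changes the input while leaving the features untouched.

```python
import math

# Hypothetical linear feature extractor f(x) = W x (2 features from 3 inputs).
W = [[1.0, 0.0, 1.0],
     [0.0, 1.0, 1.0]]

# A direction the extractor cannot see: W applied to it gives [0, 0].
NULL_DIRECTION = [1.0, 1.0, -1.0]


def features(x):
    """Apply the linear extractor row by row."""
    return [sum(w * xi for w, xi in zip(row, x)) for row in W]


def distance(u, v):
    """Euclidean distance between two equal-length vectors."""
    return math.sqrt(sum((a - b) ** 2 for a, b in zip(u, v)))


def make_near_duplicate(x, strength):
    """Shift x along the null direction: the input changes, the features do not."""
    return [xi + strength * d for xi, d in zip(x, NULL_DIRECTION)]


original = [0.2, 0.5, 0.3]  # made-up sample
near_dup = make_near_duplicate(original, strength=2.0)

input_gap = distance(original, near_dup)                        # large
feature_gap = distance(features(original), features(near_dup))  # close to zero
print(f"input gap = {input_gap:.3f}, feature gap = {feature_gap:.3g}")
```

Real feature extractors are nonlinear, so an attacker would more plausibly search for such samples by optimization rather than in closed form; the sketch only shows that a small feature distance and a large input-space difference can coexist.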

Impacts on Machine Unlearning

The results of the study reveal several crucial findings:

- Failure of Gold Standard Unlearning: Retraining from scratch, often considered the gold standard in machine unlearning, does not always remove the influence of duplicated data. Because the remaining copy stays in the retained training set, the retrained model can still carry traces of the data it was asked to forget.

- Model Degradation: When duplicated data contains key features, unlearning even one of the duplicates can significantly degrade model performance. This occurs because unlearning disrupts the model’s ability to generalize based on those important features.

- Evasion of De-duplication Methods: The novel near-duplication techniques can successfully evade standard de-duplication methods, posing a serious challenge to traditional strategies for detecting and removing duplicate data.

Applications in Federated and Reinforcement Unlearning

The implications of this research extend beyond traditional machine unlearning. The paper also considers federated unlearning and reinforcement unlearning, where unlearning is carried out across clients in a federated learning system or applied to reinforcement learning agents. The attack paradigm could undermine these frameworks by exploiting their distributed nature, which makes it harder to verify unlearning results across multiple nodes or agents.

Conclusions and Future Directions

This paper provides a comprehensive exploration of how data duplication can be used to disrupt the machine unlearning process. The proposed attack paradigm exposes significant vulnerabilities in current machine unlearning practices, especially in distributed and federated environments. The findings highlight the need for more robust unlearning methods that can handle not only direct data removal but also the challenges posed by duplicated and near-duplicate data.

Future research could focus on developing advanced unlearning techniques that are resistant to such attacks, integrating more sophisticated anomaly detection and data verification methods to ensure that models truly forget specific data when requested. Additionally, investigating cross-model duplication detection techniques and enhancing security in federated unlearning environments will be essential in making unlearning practices more reliable and secure.

By laying the groundwork for further study into these vulnerabilities, this work contributes to the ongoing evolution of machine learning models that respect privacy regulations and maintain model integrity against adversarial threats.
