Separate Motion from Appearance: Enhancing Motion Customization in Text-to-Video Diffusion Models

Text-to-video generation models have made significant progress in recent years, enabling the creation of high-quality videos from simple text prompts. Customizing motion remains difficult, however: when a model is adapted to a set of reference videos, the motion concept must be separated from the appearance of those videos. Traditional motion customization methods often suffer from "appearance leakage," where the generated video incorporates unintended elements from the reference videos, leading to poor alignment with the text description.

This issue arises primarily from the entanglement of motion and appearance features during training, where a model may encode both the motion concept and appearance details in the same representations. As a result, the model's ability to generate diverse appearances is compromised once it has been adapted to a specific motion concept. Recent research has tried to address this with motion LoRAs (Low-Rank Adaptation modules used to learn the motion), but these approaches still tend to overfit to the appearance of the reference videos.
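For readers unfamiliar with LoRA, the following is a minimal sketch of the general low-rank update, not code from the paper. A frozen weight matrix W0 is combined with a trainable product B·A scaled by alpha / r, where r is the rank. The layer size, rank, and `alpha` value below are hypothetical and chosen only for illustration.

```python
import random

def matmul(a, b):
    """Multiply two matrices stored as lists of rows."""
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*b)] for row in a]

def lora_merge(w0, b, a, alpha):
    """Return W0 + (alpha / r) * B @ A, where r is the LoRA rank."""
    r = len(a)  # A has shape (r x k)
    delta = matmul(b, a)
    return [[w + (alpha / r) * d for w, d in zip(w_row, d_row)]
            for w_row, d_row in zip(w0, delta)]

random.seed(0)
d, k, r = 8, 8, 2  # hypothetical layer size and rank
w0 = [[random.gauss(0, 1) for _ in range(k)] for _ in range(d)]    # frozen pretrained weight
a = [[random.gauss(0, 0.01) for _ in range(k)] for _ in range(r)]  # trainable, small random init
b = [[0.0] * r for _ in range(d)]                                  # trainable, zero init

# With B initialised to zero, the adapted layer starts out identical to W0.
merged = lora_merge(w0, b, a, alpha=4.0)

full_params = d * k        # parameters in the frozen matrix
lora_params = r * (d + k)  # parameters actually trained
```

Only A and B are trained, so the number of trainable parameters grows with the rank rather than with the full matrix size. Which layers receive such adapters is exactly the design decision the rest of this article is concerned with.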

The Problem of Appearance Leakage

One of the key problems with previous motion customization methods is "appearance leakage." For instance, when asked to generate a video with a newly learned motion (such as a person riding a bicycle), previous methods may also reproduce background elements (such as a window) that were present in the reference video. These unwanted features break the correspondence between the generated video and the text description, so the result does not match the user's intent.

The Block-LoRA Approach: Separating Motion and Appearance

In this paper, the authors propose Block-LoRA, a novel method that improves the separation of motion and appearance in the adaptation of text-to-video diffusion models (T2V-DMs). The key idea is to maintain the diversity of appearances while customizing the motion based on reference video clips. To achieve this, the authors introduce two core strategies:

-

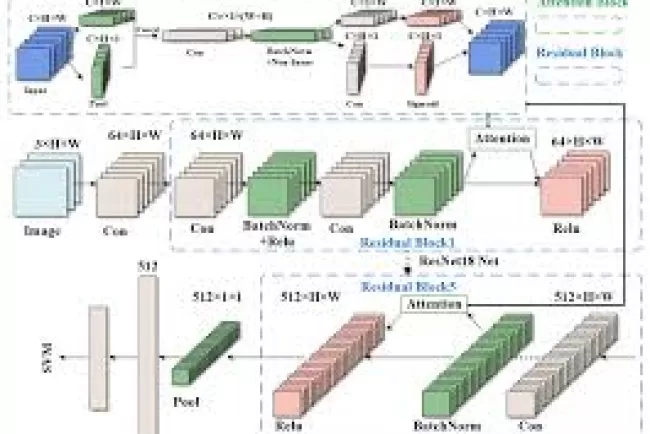

Temporal Attention Purification (TAP): TAP aims to cleanly separate the motion from the appearance by reshaping the temporal attention mechanism in the model. By only adapting the temporal attention with motion LoRAs, the method allows the pretrained Value embeddings in the attention module to focus on creating a new motion while minimizing the incorporation of appearance information. This ensures that the model generates the correct motion dynamics, such as the movement of a person or object, without overfitting to the appearance in the reference video.

- Appearance Highway (AH): To further protect appearance generation, AH modifies the skip connections in the U-Net architecture used in T2V-DMs. Instead of starting each skip connection at the output of a temporal transformer, AH starts it at the output of the corresponding spatial transformer. The rationale is that skip connections typically carry high-frequency appearance information, so rerouting them keeps appearance generation close to that of the original T2V-DM while the motion is customized through TAP.
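The two strategies above can be illustrated with a toy sketch in plain Python. This is not the authors' implementation. It assumes that TAP amounts to applying the LoRA update only to the query and key projections of temporal attention while the value projection stays frozen, and that AH amounts to choosing the spatial output, not the temporal output, as the source of the skip branch. All function names, shapes, and numeric values are hypothetical.

```python
import math

def matmul(a, b):
    """Multiply two matrices stored as lists of rows."""
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*b)] for row in a]

def add(a, b):
    """Element-wise sum of two equally shaped matrices."""
    return [[x + y for x, y in zip(ra, rb)] for ra, rb in zip(a, b)]

def softmax_rows(a):
    """Numerically stable softmax applied to each row."""
    out = []
    for row in a:
        m = max(row)
        exps = [math.exp(v - m) for v in row]
        total = sum(exps)
        out.append([e / total for e in exps])
    return out

def temporal_attention(frames, w_q, w_k, w_v, delta_q=None, delta_k=None):
    """Single-head attention across frames at one spatial location.

    frames is (num_frames x dim). The optional LoRA deltas modify only the
    query and key projections (an assumption of this sketch); w_v is frozen.
    """
    wq = add(w_q, delta_q) if delta_q is not None else w_q
    wk = add(w_k, delta_k) if delta_k is not None else w_k
    q, k, v = matmul(frames, wq), matmul(frames, wk), matmul(frames, w_v)
    scale = math.sqrt(len(wq[0]))
    k_t = [list(col) for col in zip(*k)]
    weights = softmax_rows([[s / scale for s in row] for row in matmul(q, k_t)])
    return matmul(weights, v), weights, v

def unet_block(x, spatial, temporal, appearance_highway=True):
    """Run spatial then temporal layers and choose where the skip branch starts."""
    s = spatial(x)
    t = temporal(s)
    skip = s if appearance_highway else t
    return t, skip

# Toy data: 3 frames, feature dimension 2, identity "pretrained" projections.
frames = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
identity = [[1.0, 0.0], [0.0, 1.0]]
# Rank-1 LoRA update B @ A with hypothetical values.
delta = matmul([[0.5], [-0.5]], [[1.0, 0.0]])

base_out, base_w, base_v = temporal_attention(frames, identity, identity, identity)
tap_out, tap_w, tap_v = temporal_attention(frames, identity, identity, identity, delta, delta)

# Toy functions standing in for the spatial and temporal transformers.
out_ah, skip_ah = unet_block(1, lambda x: x + 1, lambda x: x * 10, appearance_highway=True)
out_std, skip_std = unet_block(1, lambda x: x + 1, lambda x: x * 10, appearance_highway=False)
```

In this sketch the attention weights change once the LoRA deltas are added, but the value embeddings do not, so every output frame is still a weighted mix of the frozen values. Likewise, with `appearance_highway=True` the skip branch carries the spatial output and is never touched by the adapted temporal layer.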

Experimental Validation

The proposed method was evaluated through extensive experiments on text-to-video generation tasks, using various benchmarks to test its effectiveness. The results demonstrated that Block-LoRA significantly outperformed previous state-of-the-art methods by generating videos with better motion consistency and appearance fidelity.

- Appearance Alignment: The generated videos were more aligned with the text descriptions, showing that the appearance was accurately modeled while maintaining the motion dynamics specified by the reference videos.

- Motion Consistency: The method successfully adapted the motion concept from the reference videos without introducing unwanted appearance features, effectively mitigating the appearance leakage problem.

- Computational Efficiency: The approach not only improved video generation quality but also maintained computational efficiency, making it suitable for real-time applications.

Conclusion

The Block-LoRA framework presents a significant advancement in motion customization for text-to-video generation. By introducing Temporal Attention Purification (TAP) and Appearance Highway (AH), the method successfully separates motion and appearance, enabling more accurate and diverse video generation. The experimental results demonstrate that Block-LoRA can generate videos that are both highly customized in terms of motion and aligned with textual descriptions in terms of appearance, addressing key limitations in prior approaches.

This work paves the way for more effective and efficient motion customization in text-to-video diffusion models, bringing us closer to generating high-quality, contextually accurate videos with customized movements.
