Revisiting Mixture Models for Multi-Agent Simulation: Enhancing Autonomous Driving with Realistic Behavior Generation

Simulation plays a crucial role in evaluating and testing autonomous driving systems. One of the primary challenges in this space is generating realistic multi-agent behaviors that mimic real-world traffic. As autonomous driving technology continues to evolve, it's important to develop robust systems capable of handling complex traffic situations with human-like interactions. In this context, mixture models are a popular choice for generating realistic multi-agent simulations. These models represent a probability distribution as a weighted combination of simpler component distributions, which lets them capture multimodal behavior.

In this blog post, we explore a recent study on mixture models for multi-agent simulation within a unified framework, focusing on a new approach called UniMM (Unified Mixture Model). This study introduces novel ways of addressing key challenges in multi-agent simulation, including behavioral multimodality and distributional shifts that occur in closed-loop simulations. Let’s dive deeper into the methodology and findings of this study and explore how it can improve the safety and realism of autonomous driving simulations.

The Challenge of Multi-Agent Simulation in Autonomous Driving

In autonomous driving, simulations are an essential part of evaluating the system's performance in various traffic scenarios. However, creating simulations that accurately reflect real-world driving conditions is no small feat. The two primary challenges in this area are:

-

Behavioral Multimodality: In real-life traffic, agents (i.e., cars, pedestrians, cyclists) exhibit a range of possible behaviors in the same scenario. For example, a car may choose to change lanes, overtake another vehicle, or slow down, depending on various factors such as road conditions, traffic, or the behavior of other agents. Simulating this variety in behavior, or multimodality, is critical for generating realistic scenarios.

-

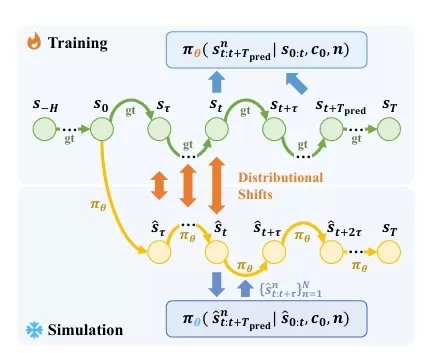

Distributional Shifts in Closed-Loop Simulations: When the model generates a simulation in a closed-loop fashion, it can encounter states that were not well-represented during training, causing errors to accumulate. This phenomenon, known as distributional shifts, poses a challenge for ensuring the simulation remains realistic as it progresses over time.
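The way errors accumulate in closed loop can be seen in a deliberately tiny toy. The 1-D setup, the +1.1 per-step bias, and the function names are all hypothetical. The only point is the contrast between starting each prediction from logged states (open loop) and feeding the model's own outputs back in (closed loop).

```python
# Hypothetical 1-D toy: the "true" agent moves +1.0 per step, while the learned
# model is slightly biased and predicts +1.1 per step from whatever position
# it is given. This illustrates drift only; it is not the study's model.


def biased_model(position):
    """Predict the next position with an assumed per-step bias of 0.1."""
    return position + 1.1


def open_loop_errors(steps):
    """Each prediction starts from the logged (ground-truth) position."""
    errors = []
    for t in range(steps):
        true_pos = float(t)
        pred_next = biased_model(true_pos)
        errors.append(abs(pred_next - (t + 1)))
    return errors


def closed_loop_errors(steps):
    """Each prediction starts from the model's own previous output."""
    errors = []
    pos = 0.0
    for t in range(steps):
        pos = biased_model(pos)  # feed the prediction back in as the next input
        errors.append(abs(pos - (t + 1)))
    return errors


print([round(e, 2) for e in open_loop_errors(5)])    # [0.1, 0.1, 0.1, 0.1, 0.1]
print([round(e, 2) for e in closed_loop_errors(5)])  # [0.1, 0.2, 0.3, 0.4, 0.5]
```

With the same small per-step bias, the open-loop error stays constant, while the closed-loop error grows with every step. This growing error is what pushes a rollout into unfamiliar states.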

To address these issues, the study introduces a unified approach to mixture models, which helps capture the diverse range of behaviors while mitigating distributional shifts.

Revisiting Mixture Models: Continuous vs. Discrete

The study revisits mixture models, which have been a mainstay in generating multimodal agent behaviors. Mixture models allow us to represent different behaviors (or components) of agents as different distributions within the model. The challenge lies in how these mixture components are structured and trained.
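For reference, here is a generic way to write a mixture model (the notation is ours, not necessarily the study's). Given the scene context $\mathbf{x}$, the distribution over an agent's future motion $\mathbf{y}$ is a weighted sum of $K$ component distributions:

```latex
p(\mathbf{y} \mid \mathbf{x}) = \sum_{k=1}^{K} \pi_k(\mathbf{x})\, p_k(\mathbf{y} \mid \mathbf{x}),
\qquad \pi_k(\mathbf{x}) \ge 0, \qquad \sum_{k=1}^{K} \pi_k(\mathbf{x}) = 1
```

Each weight $\pi_k$ is the probability of behavior mode $k$, and $p_k$ describes the motion within that mode. A GMM takes every $p_k$ to be Gaussian. A token-based model effectively places each component at a single fixed motion.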

Continuous Mixture Models

In the past, continuous mixture models, such as Gaussian Mixture Models (GMMs), have been used for multi-agent simulations. These models predict agent trajectories by combining different Gaussian distributions, with each component representing a different potential behavior of the agent. The problem with these models, however, is their limited ability to represent behaviors with complex, non-Gaussian distributions. Moreover, they require long prediction horizons, which can lead to reward hacking (where the model exploits the reward system without actually learning desirable behaviors) and generalization issues.
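To make the GMM idea concrete, the sketch below scores a candidate endpoint under a three-mode 2-D mixture. The modes (keep lane, change lane left, slow down), their weights and means, and the isotropic unit variances are all invented for illustration. Real trajectory models predict these parameters from scene context and usually model whole trajectories rather than a single endpoint.

```python
import math

# Hypothetical example: a 2-D GMM over an agent's endpoint (metres), with
# isotropic Gaussian components. All numbers are made up for illustration.


def gaussian_2d(point, mean, sigma):
    """Density of an isotropic 2-D Gaussian N(mean, sigma^2 I) at point."""
    dx = point[0] - mean[0]
    dy = point[1] - mean[1]
    sq = (dx * dx + dy * dy) / (sigma * sigma)
    return math.exp(-0.5 * sq) / (2.0 * math.pi * sigma * sigma)


def gmm_nll(point, weights, means, sigmas):
    """Negative log-likelihood of point under the weighted mixture."""
    density = sum(w * gaussian_2d(point, m, s)
                  for w, m, s in zip(weights, means, sigmas))
    return -math.log(density)


# Three modes: keep lane (straight ahead), change lane left, slow down.
weights = [0.6, 0.3, 0.1]
means = [(20.0, 0.0), (18.0, 3.5), (8.0, 0.0)]
sigmas = [1.0, 1.0, 1.0]

print(gmm_nll((20.0, 0.0), weights, means, sigmas))   # near the dominant mode
print(gmm_nll((14.0, -6.0), weights, means, sigmas))  # far from every mode
```

A point near a high-weight mode gets a low negative log-likelihood. Training a GMM-based predictor typically means minimizing this quantity on logged data.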

GPT-like Discrete Models

On the other hand, recent work has introduced GPT-like discrete models, inspired by autoregressive large language models built on transformer architectures. These models discretize trajectories into motion tokens and then generate agent behaviors through next-token prediction. They have substantially improved the realism of simulations, as a categorical distribution over many tokens can represent a broad range of behavioral modalities.
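As a rough illustration of motion tokenization, the sketch below snaps each step of a trajectory to the nearest entry in a tiny, hypothetical vocabulary of per-step displacements. The vocabulary, the nearest-neighbor rule, and the helper names are assumptions for illustration. They are not the tokenizer of any particular model.

```python
import math

# Hypothetical motion-token vocabulary: each token is a fixed (dx, dy)
# displacement per step, in metres. Real systems use a much larger vocabulary;
# these five entries are only for illustration.
VOCAB = [(0.0, 0.0), (1.0, 0.0), (2.0, 0.0), (1.0, 0.5), (1.0, -0.5)]


def nearest_token(dx, dy):
    """Index of the vocabulary displacement closest to (dx, dy)."""
    return min(range(len(VOCAB)),
               key=lambda i: math.hypot(VOCAB[i][0] - dx, VOCAB[i][1] - dy))


def tokenize(trajectory):
    """Map consecutive positions to a sequence of motion-token indices."""
    tokens = []
    for (x0, y0), (x1, y1) in zip(trajectory, trajectory[1:]):
        tokens.append(nearest_token(x1 - x0, y1 - y0))
    return tokens


def detokenize(start, tokens):
    """Rebuild positions by accumulating the token displacements."""
    x, y = start
    positions = [(x, y)]
    for t in tokens:
        x += VOCAB[t][0]
        y += VOCAB[t][1]
        positions.append((x, y))
    return positions


traj = [(0.0, 0.0), (1.1, 0.0), (2.0, 0.4), (3.1, 0.9), (5.0, 1.0)]
tokens = tokenize(traj)
print(tokens)  # [1, 3, 3, 2]
```

A GPT-like model would then be trained to predict each token from the tokens before it (and the scene context). `detokenize` turns a generated token sequence back into positions.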

Interestingly, GPT-like models can also be considered a type of mixture model, where each motion token corresponds to a discrete mixture component. The difference lies in how they handle the modeling process: continuous models rely on regression, while discrete models use tokenization and categorical prediction.
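The mixture view of a token model can be sketched directly. The softmax over token logits supplies the mixture weights, and each component is a point mass at a fixed displacement. The logits and the toy five-token vocabulary below are made up. In a real model, a transformer would produce the logits from the scene and the token history.

```python
import math
import random

# Hypothetical sketch: a categorical distribution over motion tokens viewed
# as a mixture whose components are point masses at fixed displacements.
VOCAB = [(0.0, 0.0), (1.0, 0.0), (2.0, 0.0), (1.0, 0.5), (1.0, -0.5)]


def softmax(logits):
    """Convert raw scores into mixture weights that sum to 1."""
    m = max(logits)
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]


def sample_next_displacement(logits, rng):
    """Pick component k with probability pi_k; a point mass returns its token exactly."""
    weights = softmax(logits)
    k = rng.choices(range(len(VOCAB)), weights=weights, k=1)[0]
    return k, VOCAB[k]


def expected_displacement(logits):
    """Mixture mean: sum_k pi_k * token_k."""
    weights = softmax(logits)
    return (sum(w * v[0] for w, v in zip(weights, VOCAB)),
            sum(w * v[1] for w, v in zip(weights, VOCAB)))


rng = random.Random(0)
logits = [-1.0, 2.0, 0.5, 1.0, 1.0]
print(sample_next_displacement(logits, rng))
print(expected_displacement(logits))
```

Replacing each point mass with a Gaussian whose mean is regressed by the network would turn the same structure into a continuous mixture. That is the regression-versus-categorical contrast drawn in the paragraph above.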

Bridging the Gap: The UniMM Framework

The study introduces the UniMM (Unified Mixture Model) framework, which combines both continuous and discrete approaches to generate more realistic and versatile multi-agent simulations. By considering the mixture model as a general framework, the authors suggest that both continuous and discrete models are configurations within this unified approach.

The UniMM framework explores several configurations:

- Positive Component Matching: This determines which mixture component best matches the observed behavior.

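- Continuous Regression: This determines whether the model, besides choosing a component, also regresses continuous values (such as a trajectory offset) for it. Discrete GPT-like models skip this step and output the token alone.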

- Prediction Horizon: This controls how far into the future the model predicts, which is crucial for multi-agent interactions.

- Number of Components: This refers to the number of behavioral modes an agent can exhibit, which is key to capturing multimodal behavior accurately.
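Several of these configuration knobs can be seen together in a small loss function. This is a hypothetical sketch of one common design, not the study's training objective. The positive component is chosen by a nearest-anchor ("winner-takes-all") rule, classification uses cross-entropy, and an optional L2 term stands in for continuous regression. The number of components is simply the number of anchors, and the prediction horizon would decide how far ahead `target` lies.

```python
import math

# Hypothetical sketch of one loss design for a K-component mixture model.
# Names (mixture_loss, anchors, offsets) are illustrative, not from the study.


def cross_entropy(logits, target_index):
    """-log softmax(logits)[target_index], computed stably."""
    m = max(logits)
    log_sum = m + math.log(sum(math.exp(z - m) for z in logits))
    return log_sum - logits[target_index]


def match_positive(anchors, target):
    """Positive component matching: index of the anchor nearest the ground truth."""
    return min(range(len(anchors)), key=lambda k: math.dist(anchors[k], target))


def mixture_loss(logits, anchors, offsets, target, continuous_regression=True):
    """Classification loss on the matched component, plus optional L2 regression."""
    k = match_positive(anchors, target)
    loss = cross_entropy(logits, k)
    if continuous_regression:
        # Refine the matched anchor with its predicted offset and penalize the error.
        pred = (anchors[k][0] + offsets[k][0], anchors[k][1] + offsets[k][1])
        loss += math.dist(pred, target) ** 2
    return k, loss


anchors = [(20.0, 0.0), (18.0, 3.5), (8.0, 0.0)]  # K = 3 components
offsets = [(0.0, 0.0), (0.5, -0.5), (0.0, 0.0)]   # predicted refinements
logits = [0.0, 0.0, 0.0]                          # uniform scores
print(mixture_loss(logits, anchors, offsets, target=(19.0, 3.0)))
# positive index 1; loss = log(3) + 0.25
print(mixture_loss(logits, anchors, offsets, target=(19.0, 3.0),
                   continuous_regression=False))
# positive index 1; loss = log(3)
```

With regression switched off, only the classification term remains, which is roughly the situation of a discrete token model. An anchor-free variant would match against the model's own predicted endpoints instead of fixed anchors.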

Key Findings and Insights

1. Model Configurations Don’t Fully Explain the Performance Gap

While model configurations, such as the number of components or the inclusion of continuous regression, are important for improving simulation realism, they do not completely account for the performance gap between continuous and discrete mixture models. In other words, simply changing model configurations may not be enough to achieve optimal performance.

2. The Importance of Closed-Loop Samples

The study demonstrates that closed-loop samples are key to improving the performance of multi-agent simulations. In closed-loop sampling, the model's predictions are used as inputs for its future predictions. Training on such samples exposes the model to the kinds of imperfect, self-generated states it will encounter during simulation. GPT-like models inherently use closed-loop samples through their motion tokenization process, making them particularly effective at handling distributional shifts during simulations.

By learning from closed-loop samples, the model is better able to keep its behavior predictions consistent as the simulation progresses, reducing error accumulation and improving the realism of the generated scenarios.
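Here is one way to see how tokenization can produce closed-loop samples. Suppose each token is chosen to move from the reconstructed position toward the next logged position. The reconstruction then corrects its own rounding errors, just as a rollout must act from wherever it actually ended up. This toy uses an invented four-token vocabulary and a vehicle drifting 0.2 m sideways per step. It is our illustration of that idea rather than the study's procedure.

```python
import math

# Hypothetical sketch contrasting two ways of turning a logged trajectory
# into motion tokens. Toy vocabulary of per-step displacements (metres).
VOCAB = [(0.0, 0.0), (1.0, 0.0), (1.0, 0.5), (1.0, -0.5)]


def nearest(dx, dy):
    """Index of the vocabulary displacement closest to (dx, dy)."""
    return min(range(len(VOCAB)),
               key=lambda i: math.hypot(VOCAB[i][0] - dx, VOCAB[i][1] - dy))


def rebuild(start, tokens):
    """Final position after applying the token displacements from start."""
    x, y = start
    for t in tokens:
        x, y = x + VOCAB[t][0], y + VOCAB[t][1]
    return x, y


def open_loop_tokens(traj):
    """Match each logged step in isolation; rounding errors are never corrected."""
    return [nearest(x1 - x0, y1 - y0) for (x0, y0), (x1, y1) in zip(traj, traj[1:])]


def closed_loop_tokens(traj):
    """Match from the reconstructed position, as a rollout would see it."""
    x, y = traj[0]
    tokens = []
    for gx, gy in traj[1:]:
        t = nearest(gx - x, gy - y)
        tokens.append(t)
        x, y = x + VOCAB[t][0], y + VOCAB[t][1]  # continue from where we ended up
    return tokens


# A vehicle drifting 0.2 m sideways per step: each step alone rounds to "straight".
traj = [(float(i), 0.2 * i) for i in range(6)]
for name, fn in [("open", open_loop_tokens), ("closed", closed_loop_tokens)]:
    toks = fn(traj)
    end = rebuild(traj[0], toks)
    print(name, toks, round(math.dist(end, traj[-1]), 2))
```

Matching each step in isolation rounds every 0.2 m drift down to "straight" and ends 1.0 m off the logged endpoint. Matching from the reconstructed state instead alternates tokens and ends exactly on the logged endpoint. The second token sequence is the kind of sample a model sees when it must recover from its own earlier choices.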

3. Addressing Shortcut Learning and Off-Policy Learning

One of the challenges with closed-loop samples is that they can lead to shortcut learning, where the model exploits shortcuts in the training data to generate superficially correct outputs that don’t reflect real-world behavior. The study identifies and addresses this issue by incorporating strategies to ensure that the model learns to generalize better, rather than memorizing training data.

Additionally, the off-policy learning problem—where the model learns from a distribution of data that doesn’t align with its current policy—can be mitigated by using more robust training techniques that focus on reducing the impact of off-policy data.

Experimentation and Results

The authors evaluated the UniMM framework on the Waymo Open Sim Agents Challenge (WOSAC) benchmark, which measures how closely simulated multi-agent behavior matches real-world driving logs. They compared different variants of the UniMM framework, including anchor-free and anchor-based models. Key results include:

- Anchor-free models achieved competitive simulation realism despite using relatively few components, easing concerns that a limited number of components would hold back performance.

- Anchor-based models performed well in terms of efficiency and effectiveness, showing that continuous models still hold value in multi-agent simulation scenarios.

Overall, the UniMM framework outperformed existing methods, demonstrating state-of-the-art performance on the WOSAC benchmark.

Conclusion and Future Directions

This study highlights the importance of mixture models in generating realistic multi-agent simulations for autonomous driving. By combining continuous and discrete approaches within the UniMM framework, the authors offer a versatile solution to the challenges of behavioral multimodality and distributional shifts in simulations.

Moving forward, future research can explore further refinements in closed-loop sample generation, tackle issues like shortcut learning, and continue improving the generalization of multi-agent models. This work sets the stage for more realistic and effective simulations, paving the way for safer and more reliable autonomous driving systems.

Stay Updated: Interested in the latest developments in multi-agent simulation for autonomous driving? Subscribe to our blog for more insights into AI safety and simulation techniques.

Join the Discussion: What are your thoughts on using mixture models for autonomous driving simulations? Share your insights and ideas in the comments below!
