Optimizing Code Runtime Performance through Context-Aware Retrieval-Augmented Generation

Optimizing code to run more efficiently is a crucial task, especially as hardware improvements slow and computational demands rise. Traditional compilers handle low-level optimizations, but high-level ones, such as refining logic and control flow and eliminating inefficiencies, still rely largely on human expertise. Large language models (LLMs) have shown promise on some of these problems, but they do not yet match the deep, contextual understanding that human programmers bring to the task.

To bridge this gap, this study introduces AUTOPATCH, a context-aware framework that enables LLMs to perform in-depth program optimization by mimicking human strategies. By combining structured program analysis with machine learning, AUTOPATCH offers a new approach to code optimization aimed at better runtime performance. This article outlines how AUTOPATCH pursues this goal through an analogy-driven framework that integrates historical code examples and control flow graph (CFG) analysis for a more context-aware learning process.

The Need for Automated Program Optimization

Program optimization is challenging, particularly in complex code where identifying bottlenecks and redundant operations requires deep understanding. Manual optimization by skilled programmers remains the gold standard, but it is time-consuming and susceptible to human bias. This is especially problematic in large-scale projects or systems that require frequent updates and optimizations.

Current automated tools like traditional compilers focus primarily on low-level optimization techniques such as register allocation and instruction scheduling. However, more nuanced tasks, such as optimizing control flow, refining logic, or identifying complex inefficiencies, still require manual intervention. The potential for LLMs to perform these tasks automatically offers significant advantages, particularly by reducing the reliance on human intervention, improving scalability, and enabling faster updates.

The AUTOPATCH Approach: Mimicking Human Cognitive Processes

The AUTOPATCH framework is designed to address the key challenge of automated program optimization by incorporating human-like strategies into LLMs. Drawing inspiration from how human programmers optimize code—by identifying inefficiencies in control flow and recognizing recurring optimization patterns—AUTOPATCH includes three primary components:

- Formalizing Methods to Incorporate Domain Knowledge: This step involves analyzing how human programmers typically detect inefficiencies by examining the logical flow of the code. By understanding the ways in which programmers refine logic and control flow, AUTOPATCH can apply these strategies to automate the optimization process.

-

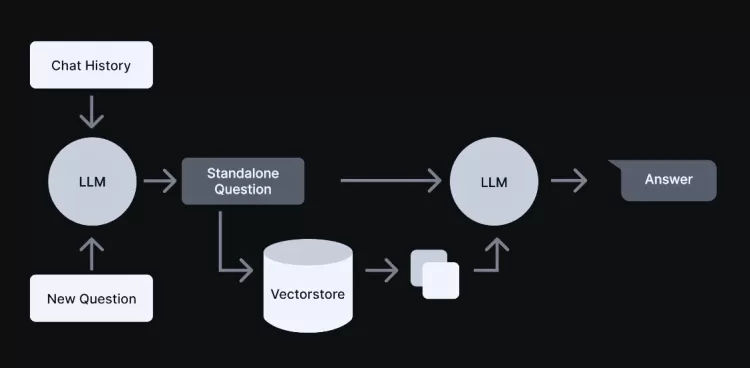

Context-Aware Learning with Historical Examples: AUTOPATCH integrates retrieval-augmented generation (RAG) to leverage historical examples of code optimizations. The system analyzes previous instances where optimizations were successfully applied, pulling from a large database of code transformations. By learning from these examples, AUTOPATCH can apply similar optimizations to new pieces of code, adapting and improving its efficiency over time.

- Optimized Code Generation Through In-Context Prompting: Using the insights gathered from human-like analysis and historical examples, AUTOPATCH generates optimized code. The framework employs enriched context, integrating lessons learned from past optimizations with structural insights drawn from the target code to produce high-quality, optimized code with better runtime performance.

How the System Works: CFG Analysis and Optimization

At the core of AUTOPATCH is its ability to analyze and modify the control flow of programs. Control flow graphs (CFGs) serve as the foundation for identifying structural inefficiencies, such as redundant operations, unnecessary loops, or complex decision-making paths. By comparing the unoptimized code’s CFG to the optimized version, the system identifies key differences and transformation opportunities.

For example, a typical optimization involves replacing inefficient loops with more efficient versions, reducing computational overhead, and improving memory access patterns. By analyzing the structural differences between the original and optimized code (denoted as ∆G), AUTOPATCH learns to apply these insights to new codebases.
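To make the idea of a structural difference concrete, here is a minimal sketch of one way ∆G could be represented: as the sets of basic blocks and edges removed from, and added to, the CFG. The adjacency-dictionary representation, the `CfgDelta` type, and the block labels are all assumptions made for illustration; the article does not specify how AUTOPATCH encodes CFGs or their differences.

```python
from dataclasses import dataclass

# Assumed representation: each basic-block label maps to its successor labels.
CFG = dict[str, set[str]]


@dataclass(frozen=True)
class CfgDelta:
    """Hypothetical encoding of the structural difference between two CFGs."""
    removed_nodes: frozenset
    added_nodes: frozenset
    removed_edges: frozenset
    added_edges: frozenset


def cfg_nodes(cfg: CFG) -> set[str]:
    """Collect every block that appears as a source or as a successor."""
    return set(cfg) | {dst for succs in cfg.values() for dst in succs}


def cfg_edges(cfg: CFG) -> set[tuple[str, str]]:
    """Flatten the adjacency mapping into (source, destination) pairs."""
    return {(src, dst) for src, succs in cfg.items() for dst in succs}


def cfg_delta(before: CFG, after: CFG) -> CfgDelta:
    """Compute which blocks and edges disappear or appear between two CFGs."""
    nodes_before, nodes_after = cfg_nodes(before), cfg_nodes(after)
    edges_before, edges_after = cfg_edges(before), cfg_edges(after)
    return CfgDelta(
        removed_nodes=frozenset(nodes_before - nodes_after),
        added_nodes=frozenset(nodes_after - nodes_before),
        removed_edges=frozenset(edges_before - edges_after),
        added_edges=frozenset(edges_after - edges_before),
    )


# Illustrative case: summing 0..n-1 with a loop versus a closed-form expression.
unoptimized = {
    "entry": {"loop_head"},
    "loop_head": {"loop_body", "exit"},
    "loop_body": {"loop_head"},
    "exit": set(),
}
optimized = {
    "entry": {"closed_form"},
    "closed_form": {"exit"},
    "exit": set(),
}

delta = cfg_delta(unoptimized, optimized)
print(sorted(delta.removed_nodes), sorted(delta.added_nodes))
```

In this toy case the delta records that the loop blocks vanish and a single straight-line block replaces them, which is the kind of structural signal the comparison is meant to surface.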

Leveraging Historical Data for Optimized Code Generation

A critical feature of AUTOPATCH is its ability to retrieve and use historical code examples. The system pulls from a database of previously optimized code snippets, using cosine similarity to find the closest matching example based on the CFG analysis. This historical data provides context for the LLM, guiding the generation of optimized code.
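The retrieval step can be sketched as a nearest-neighbor search under cosine similarity. The article does not say how CFGs are turned into vectors, so the four-element feature layout below (blocks, edges, loops, branches), the example names, and the `retrieve_closest` helper are hypothetical; a real system would more likely use learned embeddings.

```python
import math


def cosine_similarity(a, b):
    """Cosine of the angle between two equal-length numeric vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        return 0.0  # Treat an all-zero vector as similar to nothing.
    return dot / (norm_a * norm_b)


def retrieve_closest(query_features, examples):
    """Return the stored example whose features best match the query."""
    return max(
        examples,
        key=lambda ex: cosine_similarity(query_features, ex["features"]),
    )


# Hypothetical database entries; features are [blocks, edges, loops, branches].
examples = [
    {"name": "loop_to_closed_form", "features": [4, 5, 1, 0]},
    {"name": "straight_line_cleanup", "features": [2, 1, 0, 0]},
    {"name": "branch_heavy_dispatch", "features": [10, 14, 3, 6]},
]

query = [5, 6, 1, 0]  # Features of the code we want to optimize (made up).
best = retrieve_closest(query, examples)
print(best["name"])
```

Cosine similarity compares the direction of the vectors rather than their magnitude, so a small program and a larger one with the same proportions of loops and branches can still match closely.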

The key to AUTOPATCH's effectiveness is its retrieval-augmented approach. The framework not only uses the retrieved examples as raw patterns but also incorporates the rationales behind the optimizations. This allows AUTOPATCH to generate code that is not just syntactically correct but also optimized for runtime performance.
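One way to picture how a retrieved example and its rationale reach the model is as sections of a single prompt. The template, section titles, and dictionary keys below are assumptions for illustration only; the article does not publish AUTOPATCH's actual prompt format.

```python
def build_prompt(target_code: str, example: dict) -> str:
    """Assemble an in-context prompt from a retrieved example and target code."""
    sections = [
        "You are optimizing a program for runtime performance.",
        "## Retrieved example: original code\n" + example["before"],
        "## Retrieved example: optimized code\n" + example["after"],
        "## Why the optimization works\n" + example["rationale"],
        "## Structural change in the CFG\n" + example["cfg_change"],
        "## Code to optimize\n" + target_code,
        "Apply an analogous optimization and return only the optimized code.",
    ]
    return "\n\n".join(sections)


# Hypothetical retrieved example, written by hand for this sketch.
retrieved = {
    "before": "total = 0\nfor i in range(n):\n    total += i",
    "after": "total = n * (n - 1) // 2",
    "rationale": "The loop computes an arithmetic series with a closed form.",
    "cfg_change": "Loop head and body blocks replaced by one straight-line block.",
}

target = "acc = 0\nfor k in range(m):\n    acc += 2 * k"
prompt = build_prompt(target, retrieved)
print(prompt)
```

Placing the rationale and the CFG change before the target code gives the model the reason for the transformation, not just a before-and-after pair to imitate.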

Experimental Results and Improvements

To evaluate the effectiveness of AUTOPATCH, the team conducted experiments using the IBM Project CodeNet dataset, which includes a variety of code samples from different domains. AUTOPATCH was compared to GPT-4o, a state-of-the-art LLM, in terms of runtime efficiency. The results demonstrated that AUTOPATCH achieved a 7.3% improvement in execution efficiency over GPT-4o, highlighting its ability to bridge the gap between manual optimization techniques and automated code refinement.
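The article does not state how execution efficiency was measured. One common convention, assumed here, is the relative reduction in runtime of a candidate program against a baseline. The sketch below shows that arithmetic together with a simple timing helper; the function names and the toy workloads are hypothetical, and nothing in it reproduces the reported experiment.

```python
import time


def measure_seconds(func, *args, repeats=5):
    """Return the best wall-clock time over several runs of func(*args)."""
    best = float("inf")
    for _ in range(repeats):
        start = time.perf_counter()
        func(*args)
        best = min(best, time.perf_counter() - start)
    return best


def relative_improvement(baseline_seconds, candidate_seconds):
    """Fraction of the baseline runtime saved by the candidate (assumed metric)."""
    if baseline_seconds <= 0:
        raise ValueError("baseline runtime must be positive")
    return (baseline_seconds - candidate_seconds) / baseline_seconds


def sum_loop(n):
    """Toy baseline: add up 0..n-1 one element at a time."""
    total = 0
    for i in range(n):
        total += i
    return total


def sum_closed_form(n):
    """Toy candidate: the same sum via the arithmetic-series formula."""
    return n * (n - 1) // 2


baseline = measure_seconds(sum_loop, 100_000)
candidate = measure_seconds(sum_closed_form, 100_000)
print(f"relative improvement: {relative_improvement(baseline, candidate):.1%}")
```

Under this convention, a figure such as the one reported would correspond to the baseline being GPT-4o's output and the candidate being AUTOPATCH's output for the same task.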

This improvement was especially notable in the context of larger, more complex codebases, where traditional optimization methods might struggle to identify and address deep structural inefficiencies. By leveraging both human expertise and historical knowledge, AUTOPATCH was able to adapt to different code contexts and generate optimized code more effectively.

Future Directions

While the results of AUTOPATCH are promising, there is still significant potential for improvement. Future research could focus on expanding the range of optimization techniques it can handle, as well as refining the retrieval mechanisms to further improve context awareness. Additionally, the integration of neural program analysis could enhance the system’s understanding of program semantics, allowing it to make even more intelligent optimizations.

The scalability of AUTOPATCH is another area for exploration. As more code is added to the training data, the system’s ability to generate optimized code in real time could improve further, making it a valuable tool for developers working on large-scale software projects.

Conclusion

AUTOPATCH represents a significant advancement in the field of automated code optimization. By combining human-inspired strategies with the power of large language models, it provides a scalable and effective solution for refining code performance. With further improvements in retrieval mechanisms and neural program analysis, AUTOPATCH has the potential to revolutionize the way we optimize software, offering faster, more efficient development cycles, and ultimately contributing to the creation of higher-performing software systems.

By leveraging past experiences and combining them with contextual understanding, AUTOPATCH offers a pathway to more intelligent, scalable, and automated software optimization—moving closer to the goal of fully autonomous code improvement.
