Leveraging Vision Transformers and Supervised Contrastive Loss for Robust Deepfake Detection in Diverse Environments

Introduction: Tackling the Deepfake Challenge

In an era where digital content is easy to manipulate, deepfakes have emerged as a significant driver of misinformation and a threat to privacy and security. Deepfake technology can produce hyper-realistic videos and images, making it difficult to distinguish authentic content from falsified content. As this technology becomes more accessible, detecting deepfakes has become a crucial task for protecting individuals and organizations from its harmful consequences. The IEEE SP Cup 2025: Deepfake Face Detection in the Wild (DFWild Cup) addresses this challenge by asking participants to build detectors that remain robust across diverse datasets and real-world scenarios.

This report presents an approach that combines three backbone models, MaxViT, CoAtNet, and EVA-02, each fine-tuned with supervised contrastive loss to improve the separation between real and fake features. By ensembling these models, we aim to build a system that generalizes across multiple source datasets and accurately classifies both real and fake images. Our solution achieved an accuracy of 95.83% on the validation set.

Understanding the Core Concepts

-

Deepfake Detection: Deepfake detection refers to the task of identifying content that has been digitally altered to misrepresent reality. Detecting deepfakes involves recognizing inconsistencies in the facial structure, lighting, and motion that distinguish fake content from real media.

-

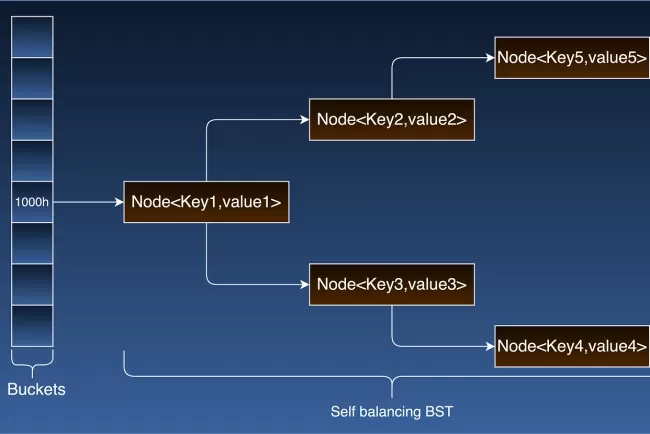

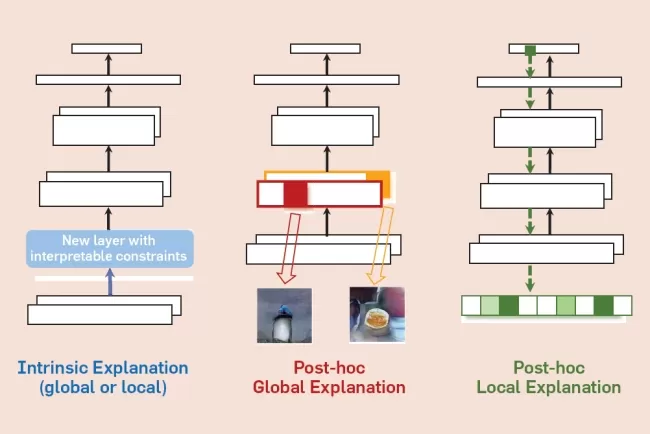

Vision Transformers (ViT): Vision Transformers represent a shift from traditional convolutional neural networks (CNNs) by employing self-attention mechanisms to process image data. Unlike CNNs, which rely on fixed kernel sizes to detect patterns, ViTs treat images as sequences of patches and apply attention mechanisms to capture global relationships across the image.

-

Supervised Contrastive Loss: Supervised contrastive loss is a loss function designed to improve the separation of features in the embedding space. By bringing similar samples closer together and pushing dissimilar samples apart, contrastive loss helps the model learn more discriminative features, enhancing its ability to classify images accurately.
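The "sequence of patches" view of a Vision Transformer can be illustrated with a small, dependency-free sketch. It only performs the patch-splitting and flattening step; in the original ViT design each flattened patch is then passed through a learned linear projection and combined with position embeddings before self-attention is applied. The function name `image_to_patch_tokens` and the nested-list image representation are hypothetical choices for illustration, not part of the pipeline described in this report.

```python
from typing import List

# An image as nested lists: image[row][col] is a list of channel values.
Image = List[List[List[float]]]


def image_to_patch_tokens(image: Image, patch_size: int) -> List[List[float]]:
    """Split an H x W x C image into non-overlapping square patches and
    flatten each patch into one vector, giving one token per patch."""
    height, width = len(image), len(image[0])
    if height % patch_size or width % patch_size:
        raise ValueError("image height and width must be divisible by patch_size")
    tokens: List[List[float]] = []
    # Visit patches in raster order (left to right, top to bottom).
    for top in range(0, height, patch_size):
        for left in range(0, width, patch_size):
            token: List[float] = []
            for row in range(top, top + patch_size):
                for col in range(left, left + patch_size):
                    token.extend(image[row][col])  # all channels of this pixel
            tokens.append(token)
    return tokens


# An 8 x 8 RGB image split into 4 x 4 patches.
demo = [[[0.0, 0.0, 0.0] for _ in range(8)] for _ in range(8)]
tokens = image_to_patch_tokens(demo, patch_size=4)
print(len(tokens), len(tokens[0]))  # 4 48  (4 patches, each 4 * 4 * 3 values)
```

Because attention then operates over this token sequence, every patch can attend to every other patch, which is the source of the global context mentioned above.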

Leveraging Vision Transformers for Deepfake Detection

Our approach to detecting deepfakes consists of three stages, each addressing a specific challenge of the task.

Stage 1: Training the Backbone with Supervised Contrastive Loss

In the first stage of training, we use three backbone models: MaxViT, CoAtNet, and EVA-02. These models were selected for their complementary strengths:

- MaxViT: This hybrid model combines convolutional blocks with multi-axis self-attention, allowing it to capture both local and global spatial features. Its sensitivity to fine-grained manipulations makes it well suited to deepfake detection.

- CoAtNet: CoAtNet stacks convolutional stages followed by transformer stages, so it captures features at multiple scales and can detect both local and global manipulations in deepfake images.

- EVA-02: This model is pretrained with masked image modeling and vision-language alignment, giving it a global understanding of image content. Its global features help identify inconsistencies such as unnatural facial proportions or lighting discrepancies in deepfake images.

We fine-tune these models with supervised contrastive loss so that real and fake images are well separated in the embedding space. By minimizing the distance between embeddings of images with the same label and maximizing the distance between embeddings of images with different labels, we improve the model's ability to distinguish authentic from manipulated content.
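For reference, the objective described above can be written as a short, dependency-free function. This is a minimal sketch of the standard supervised contrastive (SupCon) formulation (Khosla et al., 2020), assuming L2-normalized embeddings, cosine similarity, a temperature of 0.1, and averaging over anchors; the report does not state these hyperparameters, and `supcon_loss` is a hypothetical name. A real training pipeline would compute the same quantity on a batch tensor in a deep-learning framework.

```python
import math
from typing import List, Sequence


def l2_normalize(vec: Sequence[float]) -> List[float]:
    """Scale a vector to unit length (zero vectors are returned unchanged)."""
    norm = math.sqrt(sum(x * x for x in vec))
    return [x / norm for x in vec] if norm > 0 else list(vec)


def supcon_loss(embeddings: Sequence[Sequence[float]],
                labels: Sequence[int],
                temperature: float = 0.1) -> float:
    """Supervised contrastive loss, averaged over anchors that have a positive."""
    z = [l2_normalize(e) for e in embeddings]
    n = len(z)
    if n < 2:
        return 0.0
    total, num_anchors = 0.0, 0
    for i in range(n):
        # Temperature-scaled cosine similarity between anchor i and every other sample.
        logits = {a: sum(x * y for x, y in zip(z[i], z[a])) / temperature
                  for a in range(n) if a != i}
        # Log of the softmax denominator, computed with the log-sum-exp trick.
        peak = max(logits.values())
        log_denom = peak + math.log(sum(math.exp(v - peak) for v in logits.values()))
        positives = [a for a in logits if labels[a] == labels[i]]
        if not positives:
            continue  # no other sample in the batch shares this anchor's label
        # Mean negative log-probability assigned to the anchor's positives.
        total += -sum(logits[p] - log_denom for p in positives) / len(positives)
        num_anchors += 1
    return total / num_anchors if num_anchors else 0.0


labels = [0, 0, 1, 1]  # assumed encoding: 0 = real, 1 = fake
separated = [[1.0, 0.0], [0.9, 0.1], [0.0, 1.0], [0.1, 0.9]]
mixed = [[1.0, 0.0], [0.0, 1.0], [0.9, 0.1], [0.1, 0.9]]
print(supcon_loss(separated, labels) < supcon_loss(mixed, labels))  # True
```

The usage lines show the intended behavior: when same-label embeddings already point in the same direction the loss is near zero, and when same-label embeddings are orthogonal while different-label embeddings align, the loss is large, so minimizing it drives real and fake embeddings apart.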

Stage 2: Freezing the Backbone and Training the Classifier

Once the backbone models have been fine-tuned, we freeze their parameters and train only the classification heads, which predict whether an image is real or fake from the features extracted by the backbones. This two-step process lets us reuse the backbones' contrastively learned features while adapting the final layers to the specific task of deepfake detection.
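To make the freezing step concrete, the sketch below treats the frozen backbone's outputs as fixed feature vectors and trains only a logistic-regression head on them with stochastic gradient descent. The head architecture, learning rate, epoch count, and the 0 = real / 1 = fake encoding are assumptions, since the report does not specify them, and the two-dimensional features are toy stand-ins for real backbone embeddings. In a framework such as PyTorch the same effect is usually obtained by setting `requires_grad = False` on the backbone parameters and passing only the head's parameters to the optimizer.

```python
import math
import random
from typing import List, Sequence, Tuple


def sigmoid(t: float) -> float:
    """Numerically stable logistic function."""
    if t >= 0:
        return 1.0 / (1.0 + math.exp(-t))
    e = math.exp(t)
    return e / (1.0 + e)


def train_linear_head(features: Sequence[Sequence[float]], labels: Sequence[int],
                      epochs: int = 200, lr: float = 0.5,
                      seed: int = 0) -> Tuple[List[float], float]:
    """Fit a logistic-regression head with SGD on fixed backbone features.

    The features are treated as constants: only the head's weights and bias
    are updated, which is what freezing the backbone amounts to.
    """
    rng = random.Random(seed)
    weights = [0.0] * len(features[0])
    bias = 0.0
    order = list(range(len(features)))
    for _ in range(epochs):
        rng.shuffle(order)
        for idx in order:
            x, y = features[idx], labels[idx]
            prob = sigmoid(sum(w * xi for w, xi in zip(weights, x)) + bias)
            grad = prob - y  # derivative of binary cross-entropy w.r.t. the logit
            weights = [w - lr * grad * xi for w, xi in zip(weights, x)]
            bias -= lr * grad
    return weights, bias


def predict(weights: Sequence[float], bias: float, x: Sequence[float]) -> int:
    """Return 1 (fake) if the head's logit is positive, else 0 (real)."""
    return int(sum(w * xi for w, xi in zip(weights, x)) + bias > 0)


frozen_features = [[0.9, 0.1], [0.8, 0.2], [0.1, 0.9], [0.2, 0.8]]  # toy embeddings
head_labels = [0, 0, 1, 1]
w, b = train_linear_head(frozen_features, head_labels)
print([predict(w, b, x) for x in frozen_features])  # [0, 0, 1, 1]
```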

Stage 3: Majority Voting Ensemble for Improved Generalization

After training the classifiers, we combine the predictions of MaxViT, CoAtNet, and EVA-02 in an ensemble. Under majority voting, the final prediction is the label predicted by most of the models; with three models and two classes, this is the label chosen by at least two of them. This ensemble approach increases the system's robustness, helping it generalize to unseen data and diverse deepfake techniques.
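The voting rule can be sketched in a few lines. This assumes each model emits a hard "real" or "fake" label (the report does not say whether probabilities are thresholded first), and the model keys in the usage example are hypothetical identifiers.

```python
from collections import Counter
from typing import Sequence


def majority_vote(predictions: Sequence[str]) -> str:
    """Return the label predicted by more than half of the models."""
    label, votes = Counter(predictions).most_common(1)[0]
    if votes * 2 <= len(predictions):
        # Cannot happen with three voters and two classes, but can with an even count.
        raise ValueError("no strict majority among the predictions")
    return label


# Hypothetical per-model outputs for one test image.
per_model = {"maxvit": "fake", "coatnet": "real", "eva02": "fake"}
print(majority_vote(list(per_model.values())))  # fake
```

Using an odd number of models with two classes guarantees that a strict majority always exists, so no tie-breaking rule is needed for the three-model ensemble.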

Dataset and Augmentation Strategies

DFWild-Cup 2025 Dataset

The competition dataset, DFWild-Cup 2025, consists of real and fake facial images from various datasets, including Celeb-DF, FaceForensics++, and DeepfakeDetection. This diverse collection of images provides a comprehensive testbed for evaluating deepfake detection systems. The training set includes 262,160 images, with 42,690 real images and 219,470 fake images, while the validation set consists of 1,548 real and 1,524 fake images.
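The split sizes above can be checked with a few lines of arithmetic. Using only the numbers reported in this section, the snippet confirms that the real and fake counts add up to the stated training total and makes the class balance explicit: the training set contains roughly five fake images for every real one, whereas the validation set is close to balanced.

```python
# Split sizes reported for the DFWild-Cup 2025 dataset.
train_real, train_fake = 42_690, 219_470
val_real, val_fake = 1_548, 1_524

train_total = train_real + train_fake
val_total = val_real + val_fake

print(train_total)                                      # 262160, matches the reported total
print(round(train_fake / train_real, 2))                # 5.14 fake images per real image
print(round(100 * train_real / train_total, 1))         # 16.3 (% real in training)
print(val_total, round(100 * val_real / val_total, 1))  # 3072 50.4 (% real in validation)
```

This imbalance is worth keeping in mind when reading a validation accuracy, since a detector trained on this split sees far more fake than real examples but is evaluated on an almost even mix.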

Secondary Dataset Generation

To ensure our model generalizes well across a wide range of deepfake techniques, we generated additional fake images using several advanced deepfake generation methods, including face-swapping, face-reenactment, and face-editing techniques. These methods contributed 12,200 fake images, further enriching the dataset and improving the model's ability to detect manipulations from diverse sources.

Offline and Online Augmentation

To improve the model's performance and robustness, we applied both offline and online augmentation strategies:

- Offline Augmentation: We applied transformations such as random rotation, brightness adjustment, and hue shifts to real images in the original dataset. This produced 21,335 new real examples that were added to the training set, increasing the diversity of real samples, reducing overfitting, and partially offsetting the imbalance between real and fake images.

- Online Augmentation: During training, we applied augmentation dynamically, with a fixed probability for each image. This introduces variability into the training data, helping the model adapt to real-world variations and learn more robust features.
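The two augmentation modes can be illustrated with a small, dependency-free sketch. It represents an image as a flat list of RGB pixels in [0, 1] and implements two of the transformations mentioned above, brightness scaling and hue shifting (using Python's standard `colorsys` module); rotation is omitted for brevity. The probability `p = 0.5`, the parameter ranges, and the function names are illustrative assumptions, not values or code from the report. Offline augmentation corresponds to applying the transforms once per real image and storing the results; online augmentation corresponds to calling `online_augment` every time an image is loaded, so each epoch sees a different random variant.

```python
import colorsys
import random
from typing import List, Tuple

Pixel = Tuple[float, float, float]  # RGB, each channel in [0, 1]


def adjust_brightness(pixels: List[Pixel], factor: float) -> List[Pixel]:
    """Scale every channel by `factor`, clipping the result to [0, 1]."""
    return [tuple(min(1.0, max(0.0, c * factor)) for c in px) for px in pixels]


def shift_hue(pixels: List[Pixel], delta: float) -> List[Pixel]:
    """Rotate the hue of every pixel by `delta` (a fraction of the color wheel)."""
    shifted = []
    for r, g, b in pixels:
        h, s, v = colorsys.rgb_to_hsv(r, g, b)
        shifted.append(colorsys.hsv_to_rgb((h + delta) % 1.0, s, v))
    return shifted


def online_augment(pixels: List[Pixel], rng: random.Random,
                   p: float = 0.5) -> List[Pixel]:
    """Apply each transform independently with probability p, drawing fresh
    random parameters on every call."""
    if rng.random() < p:
        pixels = adjust_brightness(pixels, rng.uniform(0.8, 1.2))
    if rng.random() < p:
        pixels = shift_hue(pixels, rng.uniform(-0.05, 0.05))
    return pixels


rng = random.Random(42)
image = [(0.2, 0.4, 0.6), (0.9, 0.1, 0.1)]
augmented = online_augment(image, rng)
print(len(augmented))  # 2
```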

Results and Evaluation

Our model achieved 95.83% accuracy on the validation set, indicating that it detects deepfakes effectively across the scenarios represented there. These results suggest that combining MaxViT, CoAtNet, and EVA-02, fine-tuned with supervised contrastive loss and supported by data augmentation and ensemble learning, is an effective approach to deepfake detection.
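As a quick sanity check on what the headline number means, the snippet below finds which counts of correct predictions on the 3,072-image validation set round to 95.83%. It assumes the reported figure is plain accuracy over the whole validation set; under that assumption, exactly one count is consistent with it.

```python
val_total = 1_548 + 1_524  # validation images: real + fake
reported_accuracy = 95.83  # percent, as reported

# Every number of correct predictions whose accuracy rounds to the reported value.
consistent = [n for n in range(val_total + 1)
              if round(100 * n / val_total, 2) == reported_accuracy]
print(consistent)                  # [2944]
print(val_total - consistent[0])   # 128 misclassified images
```

In other words, the reported accuracy corresponds to 128 misclassified validation images out of 3,072.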

Conclusion: Advancing Deepfake Detection with Ensemble Vision Transformers

This report outlines an approach for detecting deepfakes across diverse datasets that combines Vision Transformer-based backbones with supervised contrastive loss. By leveraging MaxViT, CoAtNet, and EVA-02 in a majority-voting ensemble, we built a deepfake detection system designed to generalize across various deepfake generation methods. Our results in the IEEE SP Cup 2025 competition highlight the effectiveness of this approach and point to a promising direction for detecting deepfakes in real-world scenarios.

As deepfake technology continues to evolve, how do you think future models can adapt to new, unseen manipulation techniques? Would incorporating more domain-specific features improve detection further, or is the focus better placed on enhancing model robustness across varied datasets?
