HAPFL: A New Approach to Heterogeneity-Aware Personalized Federated Learning

As the Internet of Things (IoT) continues to grow at an unprecedented rate, with billions of devices generating massive amounts of data, the ability to process and learn from this data in a secure and scalable manner is more critical than ever. Federated Learning (FL), a decentralized machine learning paradigm, has emerged as a promising solution to address the growing concerns about data privacy and security. It allows for the training of machine learning models across multiple decentralized devices without the need to exchange raw data, which makes it especially suitable for IoT applications.

Despite its promise, federated learning faces significant challenges, particularly in handling heterogeneous devices and in mitigating straggling latency in networks where device capabilities vary widely. In this post, we explore HAPFL (Heterogeneity-aware Personalized Federated Learning), an approach designed to tackle these challenges.

What is HAPFL?

HAPFL is a heterogeneity-aware personalized federated learning framework designed to improve the efficiency and accuracy of federated learning by addressing two main problems:

- Straggler Latency: This problem arises when devices with lower computational capabilities take longer to train models, causing delays for high-performance devices that have completed their tasks faster.

- Model Heterogeneity: In real-world federated learning systems, different clients may have varying computational resources, data sizes, and training capacities, leading to imbalances in the contributions of each device during model aggregation.

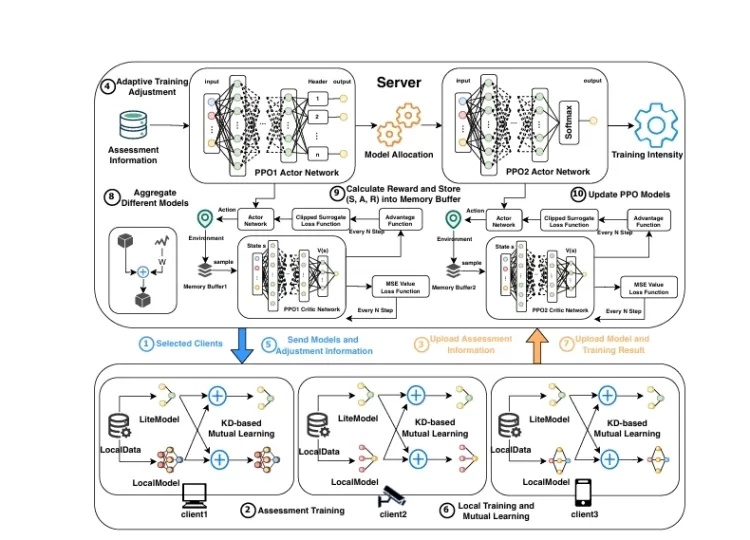

HAPFL leverages two deep reinforcement learning (DRL) agents to dynamically allocate training resources to clients based on their computational capabilities. This not only minimizes straggling latency but also helps balance the training intensities across heterogeneous devices. In addition, HAPFL introduces a LiteModel, a lightweight homogeneous model deployed across all devices. This model interacts with the personalized local models of clients through knowledge distillation, allowing for efficient global model aggregation.
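To make the interaction between the LiteModel and a client's local model more concrete, here is a minimal sketch of mutual knowledge distillation for a single training sample. It assumes a deep-mutual-learning style loss, where each model fits the true label and is also pulled toward the other model's temperature-softened predictions. The function names, the `temperature` value, and the `alpha` mixing weight are illustrative assumptions and are not taken from the HAPFL paper.

```python
import math

def softmax(logits, temperature=1.0):
    """Numerically stable softmax with an optional temperature."""
    scaled = [z / temperature for z in logits]
    peak = max(scaled)
    exps = [math.exp(z - peak) for z in scaled]
    total = sum(exps)
    return [e / total for e in exps]

def cross_entropy(probs, label):
    """Negative log-likelihood of the true class."""
    return -math.log(probs[label])

def kl_divergence(p, q):
    """KL(p || q) for two discrete distributions of equal length."""
    return sum(pi * math.log(pi / qi) for pi, qi in zip(p, q) if pi > 0)

def mutual_distillation_losses(local_logits, lite_logits, label,
                               temperature=2.0, alpha=0.5):
    """Return (local_loss, lite_loss) for one sample.

    Assumed form: (1 - alpha) * cross-entropy + alpha * KL(peer || self),
    with the KL term computed on temperature-softened outputs.
    """
    local_soft = softmax(local_logits, temperature)
    lite_soft = softmax(lite_logits, temperature)
    local_ce = cross_entropy(softmax(local_logits), label)
    lite_ce = cross_entropy(softmax(lite_logits), label)
    local_loss = (1 - alpha) * local_ce + alpha * kl_divergence(lite_soft, local_soft)
    lite_loss = (1 - alpha) * lite_ce + alpha * kl_divergence(local_soft, lite_soft)
    return local_loss, lite_loss

# Hypothetical logits for a 3-class problem where the true class is 0.
local_loss, lite_loss = mutual_distillation_losses([2.0, 0.5, -1.0], [1.0, 0.8, 0.1], label=0)
print(local_loss, lite_loss)
```

When the two models already agree, the KL terms vanish and each loss reduces to its scaled cross-entropy, so the distillation term only matters while the models still disagree.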

Key Features of HAPFL:

- Reinforcement Learning for Dynamic Resource Allocation: HAPFL uses DRL agents to adaptively determine the size and complexity of the models assigned to each client based on their training performance and computational capabilities. This ensures that clients with more computational power can train with higher intensities, while less powerful clients are not overburdened.

- LiteModel for Global Knowledge Sharing: Each client is equipped with a LiteModel that serves as a universal baseline model. This LiteModel is involved in mutual learning with the local model, transferring knowledge across the entire client network. It helps ensure that the global model is aggregated consistently, even in heterogeneous environments.

- Efficient Model Aggregation Using Knowledge Distillation: To mitigate the impacts of low-contributing clients, HAPFL employs a knowledge distillation-based aggregation method, which ensures that the global model benefits from the knowledge accumulated in all local models. This method also uses information entropy and accuracy weighting to adjust the contributions of each model during aggregation.
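As a rough illustration of entropy and accuracy weighting, the sketch below scores each client by the Shannon entropy of its label distribution multiplied by its accuracy, then normalizes the scores into aggregation weights. This is only one plausible reading: the choice of label entropy, the multiplicative combination, and the function names are assumptions made for illustration, and the actual HAPFL formula may differ.

```python
import math
from collections import Counter

def label_entropy(labels):
    """Shannon entropy (in bits) of a client's label distribution."""
    counts = Counter(labels)
    n = len(labels)
    return -sum((c / n) * math.log2(c / n) for c in counts.values())

def aggregation_weights(client_labels, client_accuracies):
    """Normalized weights proportional to entropy * accuracy (assumed combination)."""
    scores = [label_entropy(labels) * acc
              for labels, acc in zip(client_labels, client_accuracies)]
    total = sum(scores)
    if total == 0:
        # Fall back to uniform weights when no client carries any signal.
        return [1.0 / len(scores)] * len(scores)
    return [s / total for s in scores]

# Hypothetical clients: balanced 4-class data, 2-class data, single-class data.
labels = [[0, 1, 2, 3], [0, 0, 1, 1], [0, 0, 0, 0]]
accuracies = [0.9, 0.9, 0.9]
print(aggregation_weights(labels, accuracies))
```

With equal accuracies, the client holding the most diverse data receives the largest weight, and a client whose data contains a single class contributes nothing under this particular scoring rule.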

How Does HAPFL Improve Federated Learning?

1. Reducing Straggling Latency

One of the most significant improvements HAPFL offers is in reducing the latency caused by slower devices during model training. By dynamically adjusting the training intensity for each client, HAPFL ensures that faster devices are not delayed by slower ones. This dynamic allocation leads to better resource utilization and faster training times overall.
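The effect is easy to see with a small worked example. The sketch below measures straggling latency as the gap between the slowest and fastest client in a round, then compares a uniform epoch count with a per-client allocation. The allocation rule here is a simple "fit as many epochs as the time budget allows" heuristic that stands in for HAPFL's DRL agent, and the client speeds are made-up numbers.

```python
def round_times(seconds_per_epoch, epochs):
    """Wall-clock time each client needs to finish its local training."""
    return [s * e for s, e in zip(seconds_per_epoch, epochs)]

def straggling_latency(times):
    """How long the fastest client waits for the slowest one."""
    return max(times) - min(times)

def budgeted_epochs(seconds_per_epoch, time_budget):
    """Give each client as many whole epochs as fit in the budget (at least one)."""
    return [max(1, int(time_budget // s)) for s in seconds_per_epoch]

# Hypothetical clients, described by seconds per local epoch.
speeds = [2.0, 5.0, 10.0]

uniform = round_times(speeds, [5, 5, 5])
adaptive_epochs = budgeted_epochs(speeds, time_budget=20.0)
adaptive = round_times(speeds, adaptive_epochs)

print(adaptive_epochs)               # [10, 4, 2]
print(straggling_latency(uniform))   # 40.0
print(straggling_latency(adaptive))  # 0.0
```

The numbers were chosen to divide evenly, so the gap closes completely. With real devices the times would rarely line up this neatly, and a learned policy also has to account for how training intensity affects accuracy.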

2. Improving Accuracy with Personalized Models

Federated learning typically struggles with model heterogeneity, because clients with different capabilities may not perform equally well with the same global model. HAPFL addresses this by letting each client train a personalized model matched to its own data and computational power. Each client therefore ends up with a model suited to its environment, which in turn leads to more accurate and robust models.

3. Efficient Global Model Aggregation

In traditional federated learning, model aggregation can be challenging, especially when clients contribute models with varying levels of performance. HAPFL introduces the LiteModel and uses knowledge distillation to transfer knowledge across all models. This allows for more effective aggregation, as the LiteModel helps to bridge gaps between the local models of clients with different capabilities.
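Because every client holds a LiteModel with the same architecture, LiteModel parameters can be combined element by element, which is not possible for local models of different sizes. The sketch below shows a plain weighted average over flat parameter lists as a simplified stand-in for that step. It does not reproduce HAPFL's knowledge distillation-based aggregation; the function name is hypothetical, and the weights are assumed to come from whatever contribution scoring the server uses.

```python
def aggregate_lite_models(client_params, weights):
    """Weighted element-wise average of same-shaped parameter vectors."""
    if len(client_params) != len(weights):
        raise ValueError("need exactly one weight per client")
    dim = len(client_params[0])
    if any(len(p) != dim for p in client_params):
        raise ValueError("all LiteModels must share one architecture")
    total = sum(weights)
    if total <= 0:
        raise ValueError("weights must sum to a positive value")
    return [
        sum(w * p[i] for w, p in zip(weights, client_params)) / total
        for i in range(dim)
    ]

# Two hypothetical clients with two parameters each; the second counts three times as much.
global_params = aggregate_lite_models([[1.0, 2.0], [3.0, 4.0]], weights=[1.0, 3.0])
print(global_params)  # [2.5, 3.5]
```

The shape check is the important part of the design: it fails loudly if a client submits parameters from a differently sized model, which is exactly the situation the shared LiteModel is meant to avoid.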

Experimental Results

To evaluate the effectiveness of HAPFL, extensive simulations were conducted using three well-known datasets: MNIST, CIFAR-10, and ImageNet-10. The results of these experiments demonstrate the superior performance of HAPFL compared to traditional federated learning approaches:

- Increased Accuracy: HAPFL improved model accuracy by up to 7.3%, showing that its dynamic training allocation and personalized models yield better results in heterogeneous environments.

- Reduced Training Time: HAPFL reduced overall training time by 20.9% to 40.4%, thanks to more efficient resource allocation and minimized straggler latency.

- Decreased Straggling Latency: Straggling latency differences were reduced by 19.0% to 48.0%, highlighting the effectiveness of HAPFL’s dynamic resource allocation strategy.

These results underscore the potential of HAPFL to address key challenges in federated learning, making it a promising framework for future IoT and other decentralized machine learning applications.

Conclusion

HAPFL introduces a heterogeneity-aware, personalized federated learning approach that effectively tackles challenges related to straggler latency and model heterogeneity. By leveraging reinforcement learning for dynamic resource allocation and incorporating a LiteModel for global knowledge sharing, HAPFL offers a scalable solution for federated learning in heterogeneous environments.

The experimental results demonstrate significant improvements in accuracy, training time, and latency, making HAPFL an exciting development in the field of federated learning. As IoT devices continue to proliferate, frameworks like HAPFL will be essential for ensuring that machine learning can scale efficiently, securely, and reliably across a wide variety of devices.

What do you think of HAPFL's approach to federated learning? Do you see it as a viable solution for real-world applications? Let us know your thoughts in the comments below!
